| Title: | Data Validation and Organization of Metadata for Local and Remote Tables |

|---|---|

| Description: | Validate data in data frames, 'tibble' objects, 'Spark' 'DataFrames', and database tables. Validation pipelines can be made using easily-readable, consecutive validation steps. Upon execution of the validation plan, several reporting options are available. User-defined thresholds for failure rates allow for the determination of appropriate reporting actions. Many other workflows are available including an information management workflow, where the aim is to record, collect, and generate useful information on data tables. |

| Authors: | Richard Iannone [aut, cre]

|

| Maintainer: | Richard Iannone <[email protected]> |

| License: | MIT + file LICENSE |

| Version: | 0.12.2 |

| Built: | 2024-10-24 04:28:40 UTC |

| Source: | CRAN |

The action_levels() function works with the actions argument that is

present in the create_agent() function and in every validation step

function (which also has an actions argument). With it, we can provide

threshold failure values for any combination of warn, stop, or notify

failure states.

We can react to any entering of a state by supplying corresponding functions

to the fns argument. They will undergo evaluation at the time when the

matching state is entered. If provided to create_agent() then the policies

will be applied to every validation step, acting as a default for the

validation as a whole.

Calls of action_levels() could also be applied directly to any validation

step and this will act as an override if set also in create_agent(). Usage

of action_levels() is required to have any useful side effects (i.e.,

warnings, throwing errors) in the case of validation functions operating

directly on data (e.g., mtcars %>% col_vals_lt("mpg", 35)). There are two

helper functions that are convenient when using validation functions directly

on data (the agent-less workflow): warn_on_fail() and stop_on_fail().

These helpers either warn or stop (default failure threshold for each is set

to 1), and, they do so with informative warning or error messages. The

stop_on_fail() helper is applied by default when using validation functions

directly on data (more information on this is provided in Details).

action_levels(warn_at = NULL, stop_at = NULL, notify_at = NULL, fns = NULL) warn_on_fail(warn_at = 1) stop_on_fail(stop_at = 1)action_levels(warn_at = NULL, stop_at = NULL, notify_at = NULL, fns = NULL) warn_on_fail(warn_at = 1) stop_on_fail(stop_at = 1)

warn_at |

Threshold value for the 'warn' failure state

Either the threshold number or the threshold fraction of failing test

units that result in entering the |

stop_at |

Threshold value for the 'stop' failure state

Either the threshold number or the threshold fraction of failing test

units that result in entering the |

notify_at |

Threshold value for the 'notify' failure state

Either the threshold number or the threshold fraction of failing test

units that result in entering the |

fns |

Functions to execute when entering failure states

A named list of functions that is to be paired with the appropriate failure

states. The syntax for this list involves using failure state names from

the set of |

The output of the action_levels() call in actions will be interpreted

slightly differently if using an agent or using validation functions

directly on a data table. For convenience, when working directly on data, any

values supplied to warn_at or stop_at will be automatically given a stock

warning() or stop() function. For example using

small_table %>% col_is_integer("date") will provide a detailed stop message

by default, indicating the reason for the failure. If you were to supply the

fns for stop or warn manually then the stock functions would be

overridden. Furthermore, if actions is NULL in this workflow (the default),

pointblank will use a stop_at value of 1 (providing a detailed,

context-specific error message if there are any failing units). We can

absolutely suppress this automatic stopping behavior at each validation

step by setting active = FALSE. In this interactive data case, there is no

stock function given for notify_at. The notify failure state is less

commonly used in this workflow as it is in the agent-based one.

When using an agent, we often opt to not use any functions in fns as the

warn, stop, and notify failure states will be reported on when using

create_agent_report() (and, usually that's sufficient). Instead, using the

end_fns argument is a better choice since that scheme provides useful data

on the entire interrogation, allowing for finer control on side effects and

reducing potential for duplicating any side effects.

An action_levels object.

Any threshold values supplied for the warn_at, stop_at, or notify_at

arguments correspond to the warn, stop, and notify failure states,

respectively. A threshold value can either relates to an absolute number of

test units or a fraction-of-total test units that are failing. Exceeding

the threshold means entering one or more of the warn, stop, or notify

failure states.

If a threshold value is a decimal value between 0 and 1 then it's a

proportional failure threshold (e.g., 0.15 indicates that if 15 percent of

the test units are found to be failing, then the designated failure state

is entered). Absolute values starting from 1 can be used instead, and this

constitutes an absolute failure threshold (e.g., 10 means that if 10 of the

test units are found to be failing, the failure state is entered).

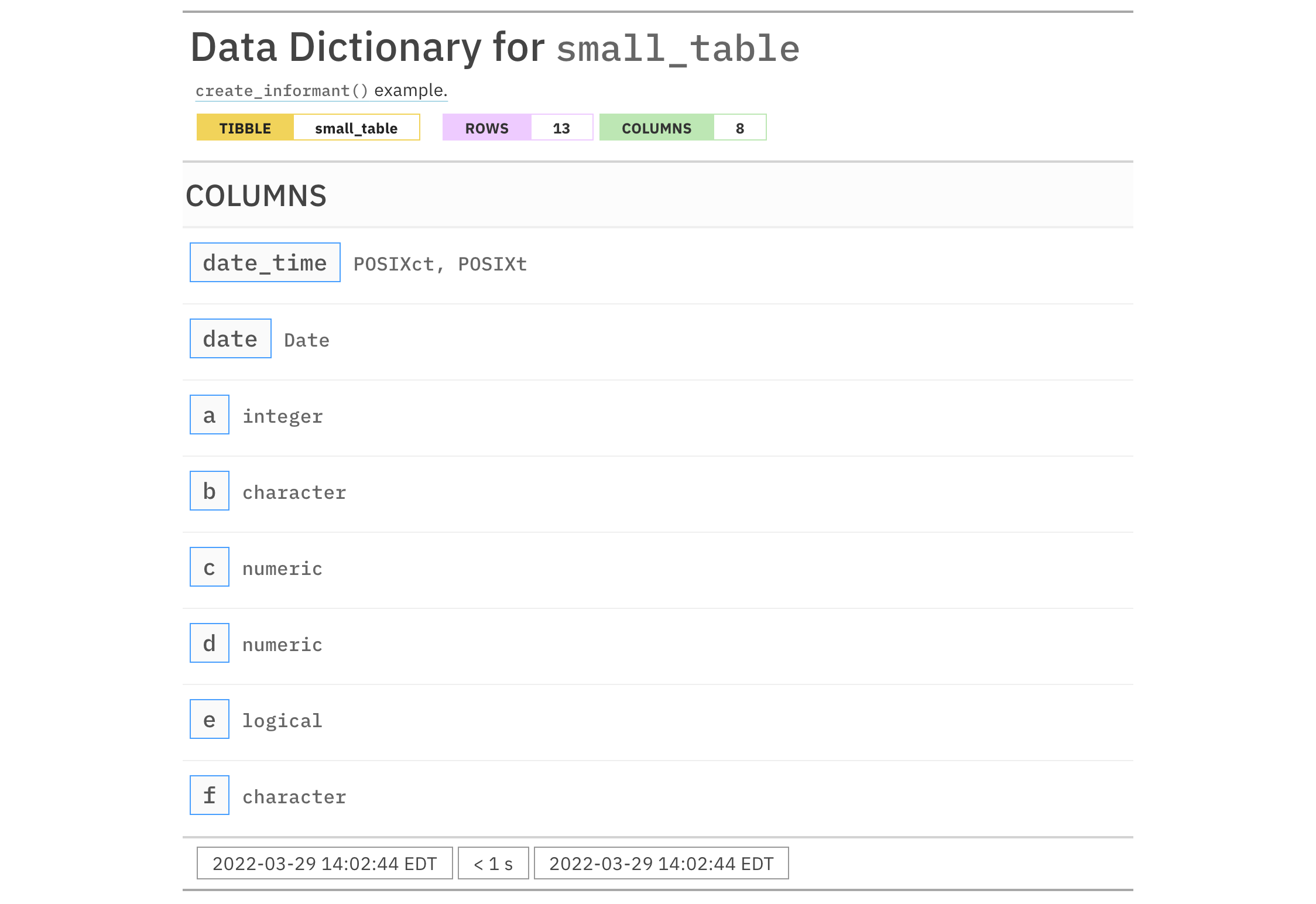

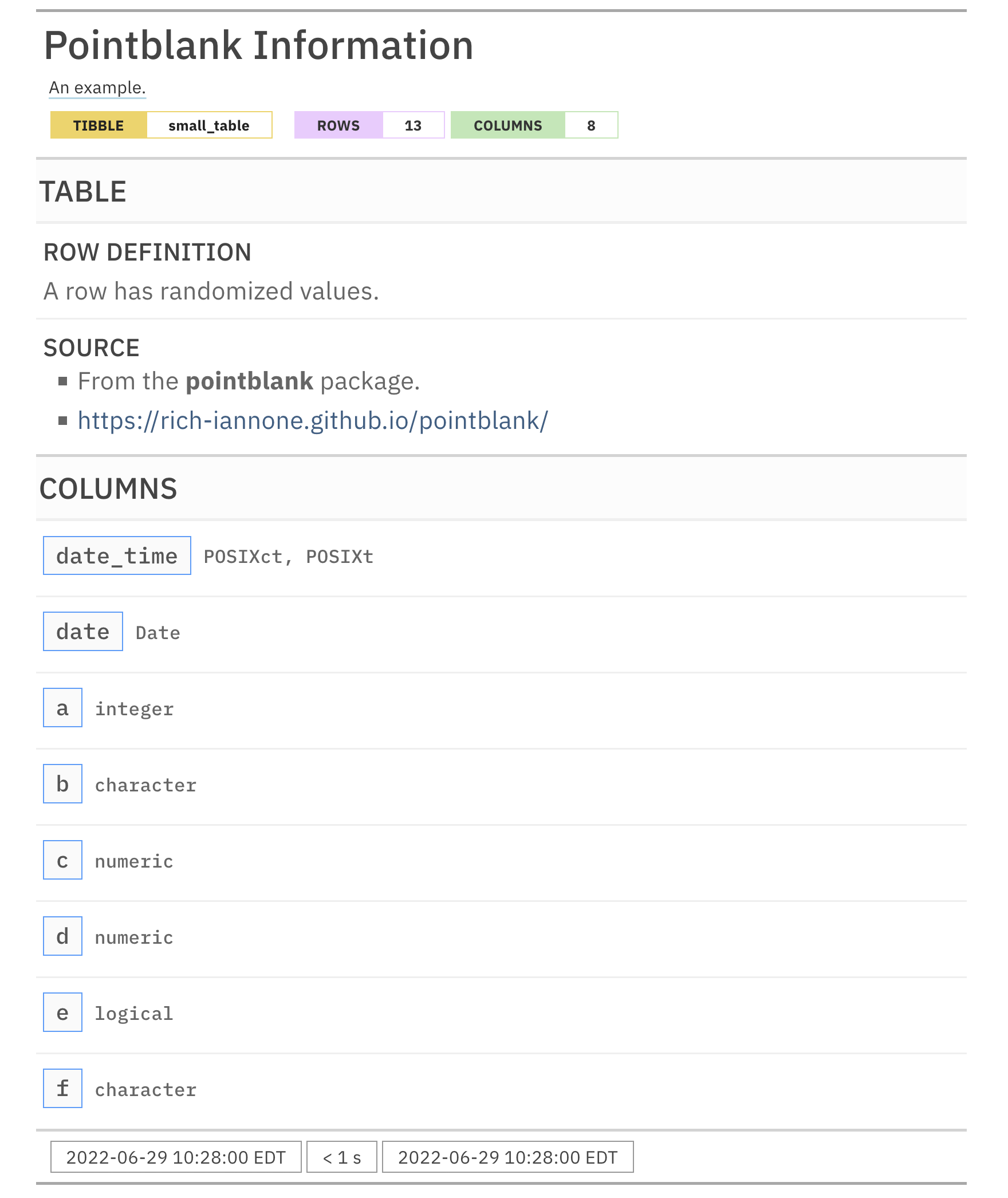

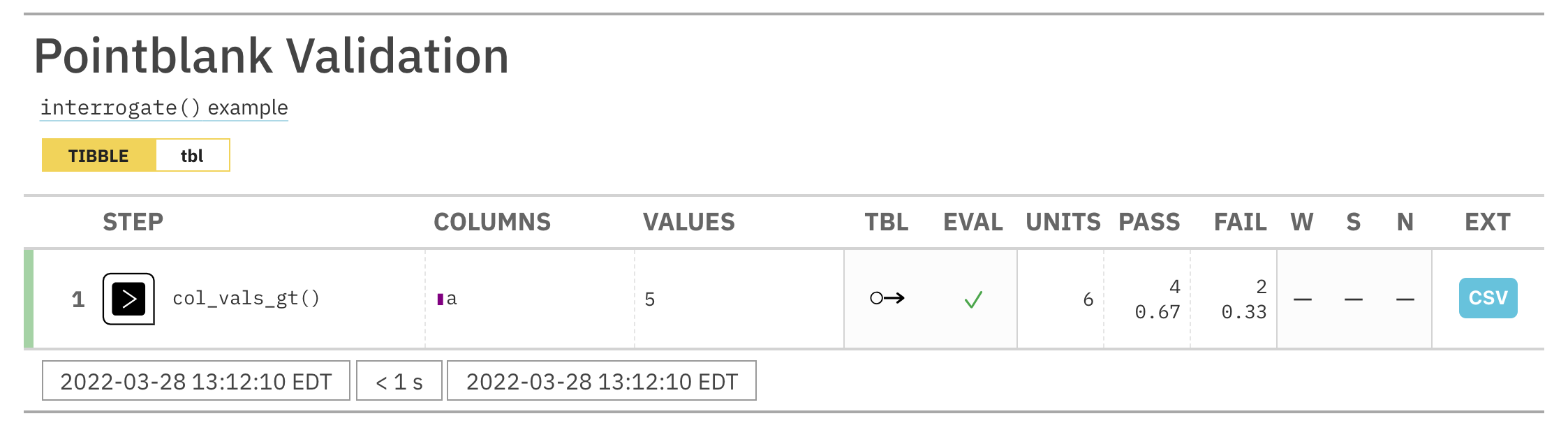

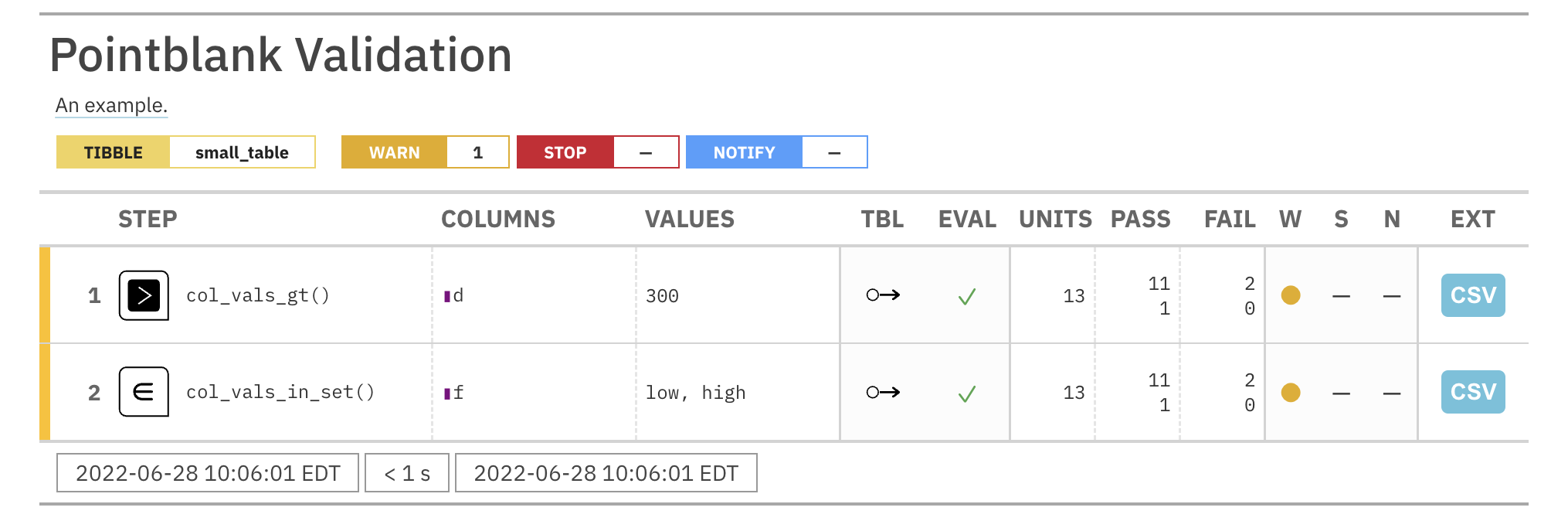

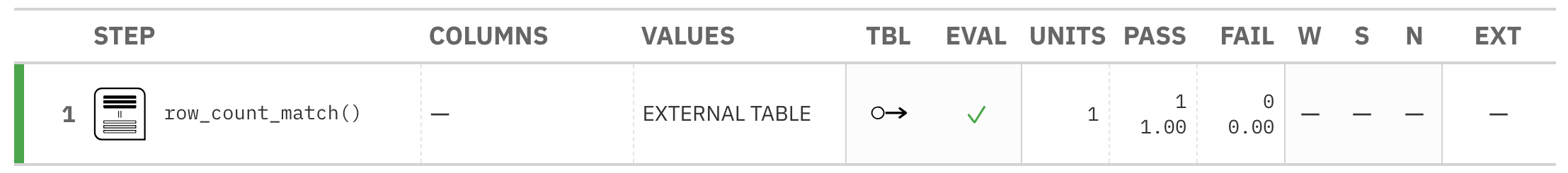

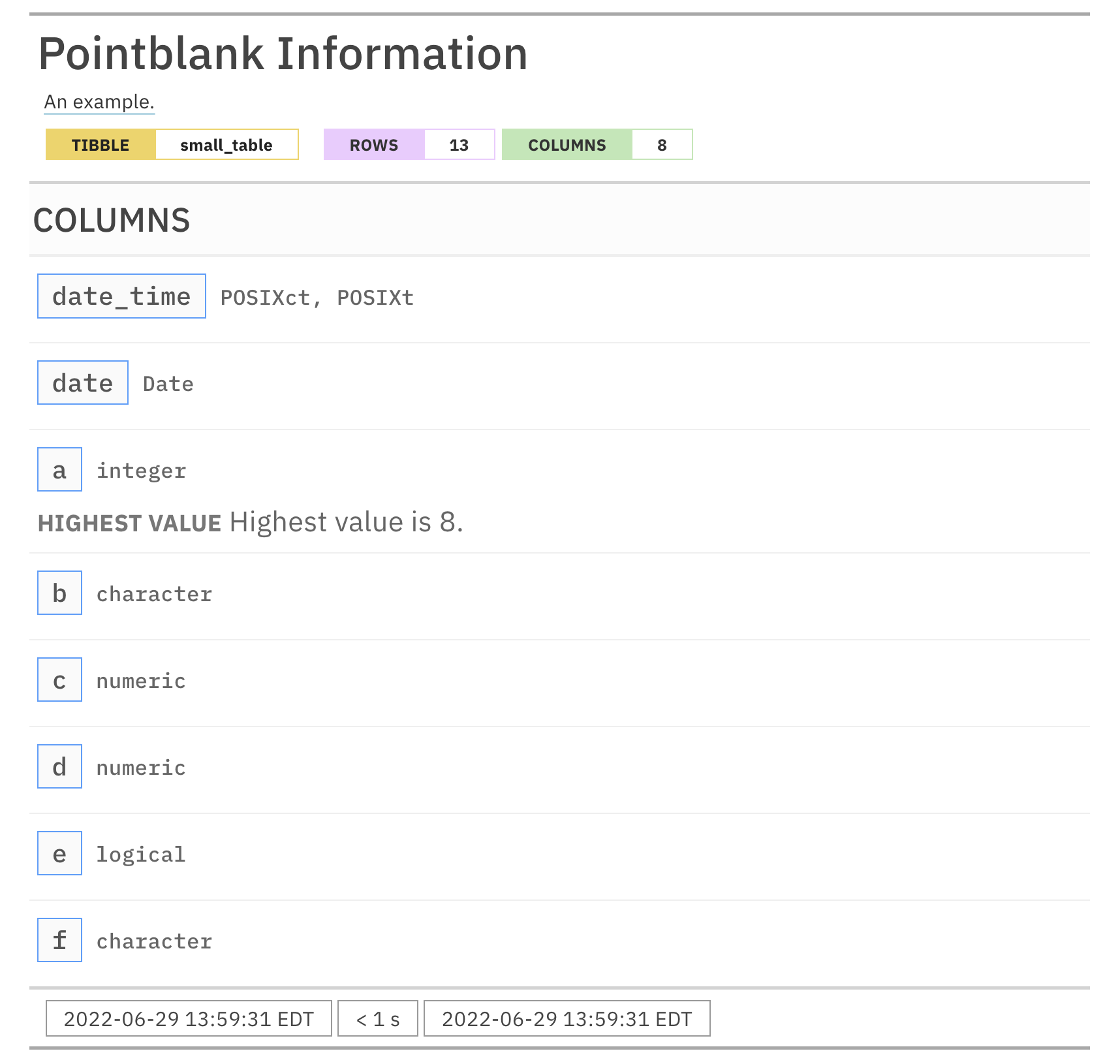

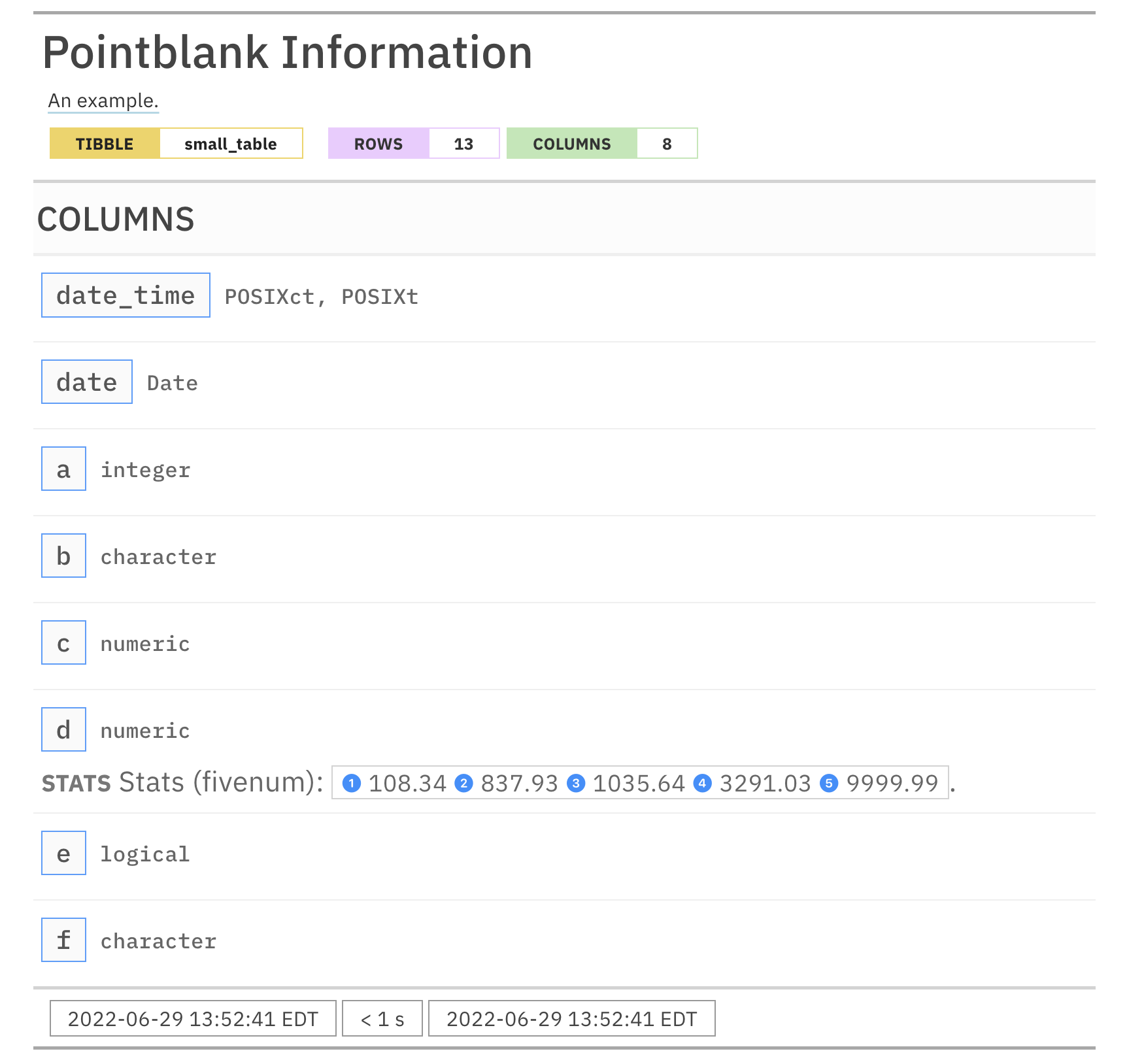

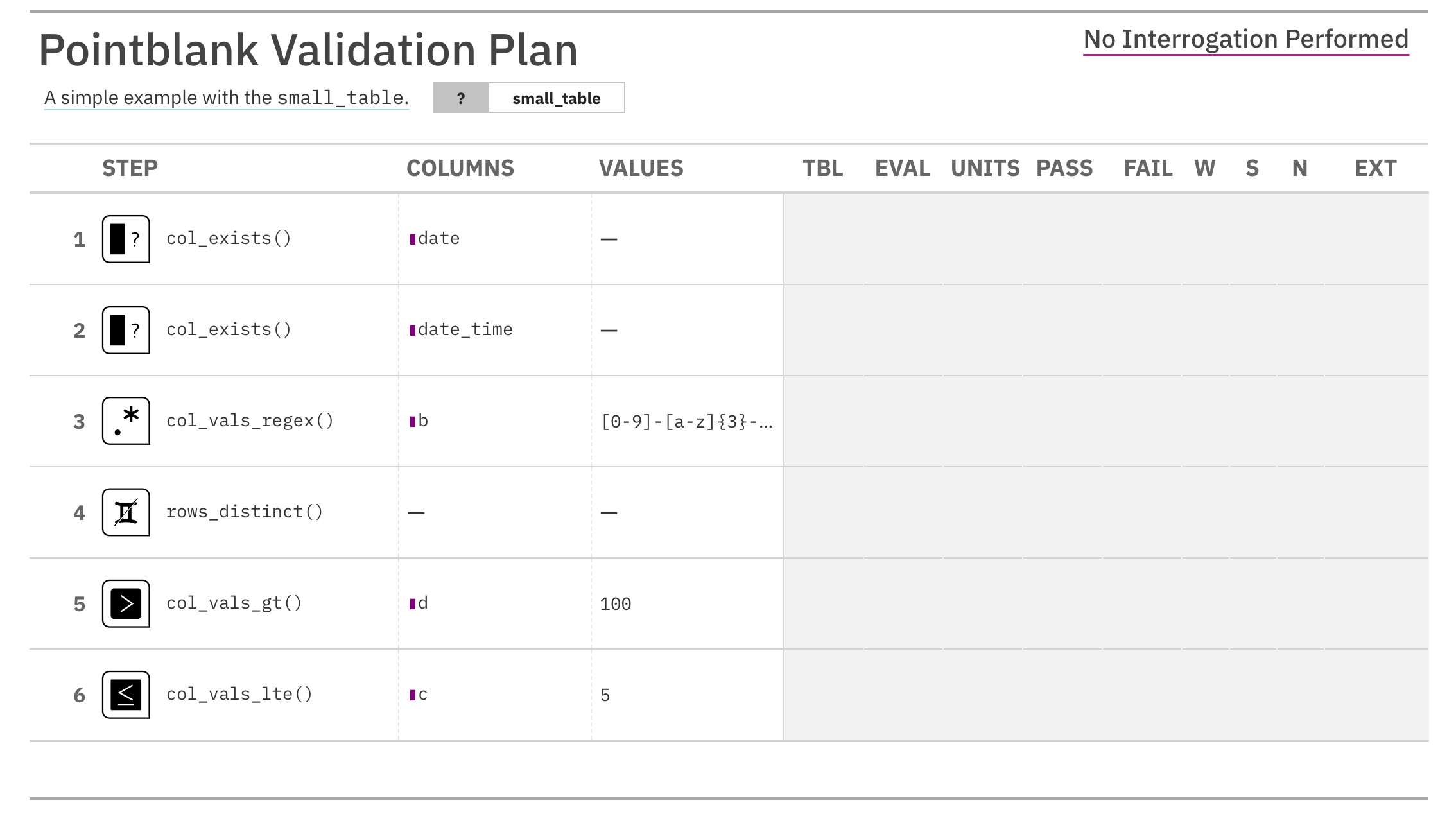

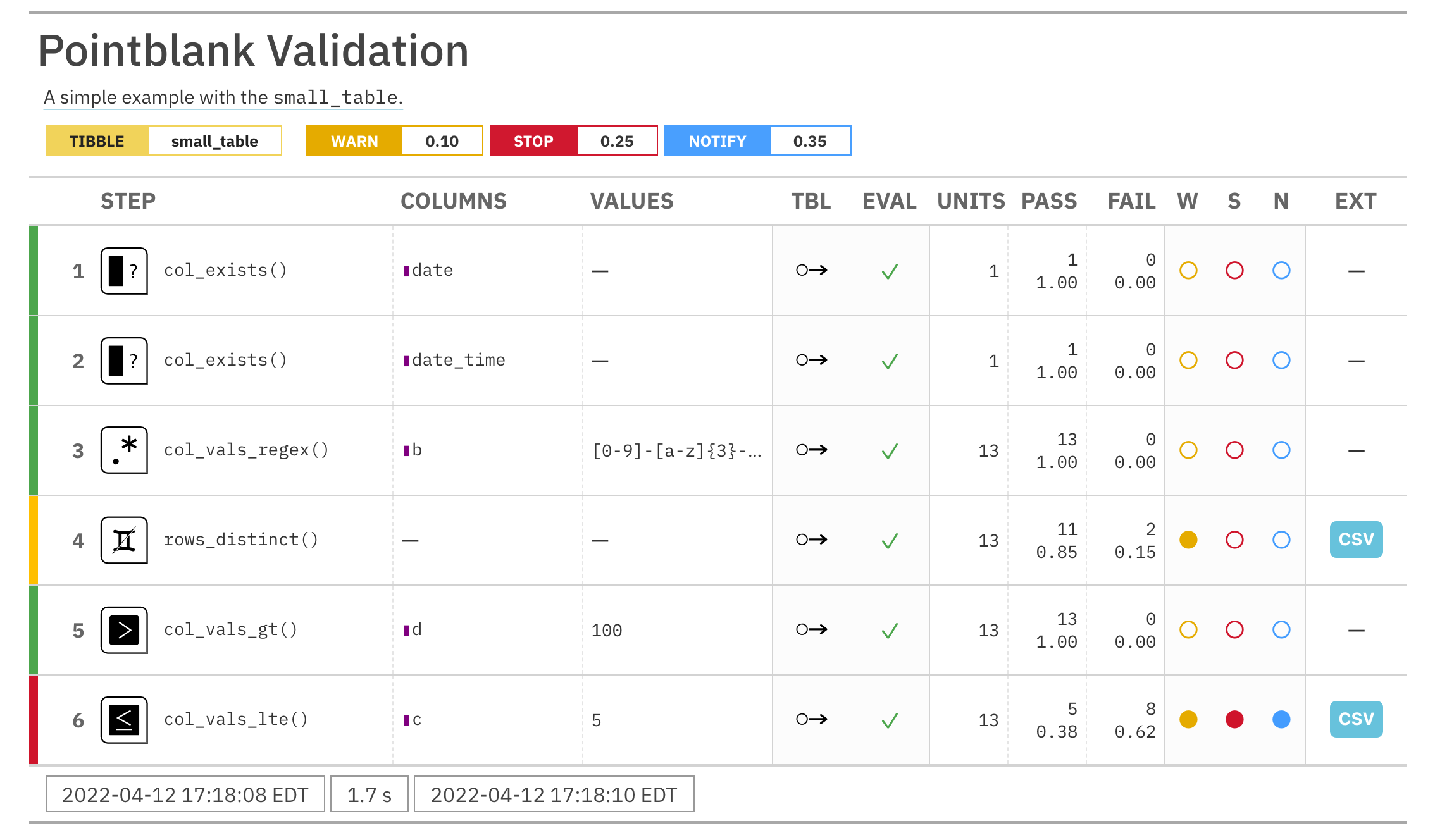

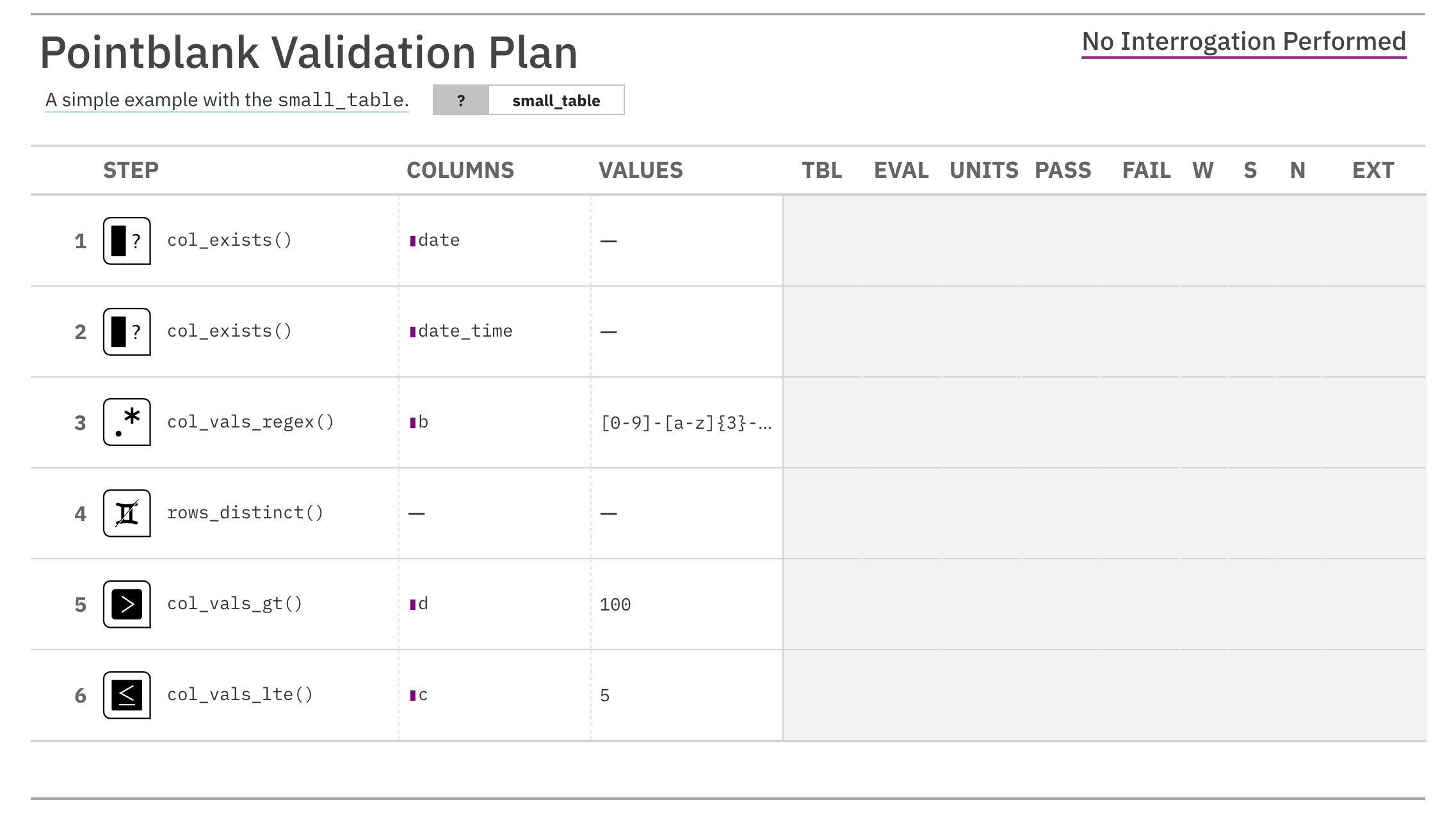

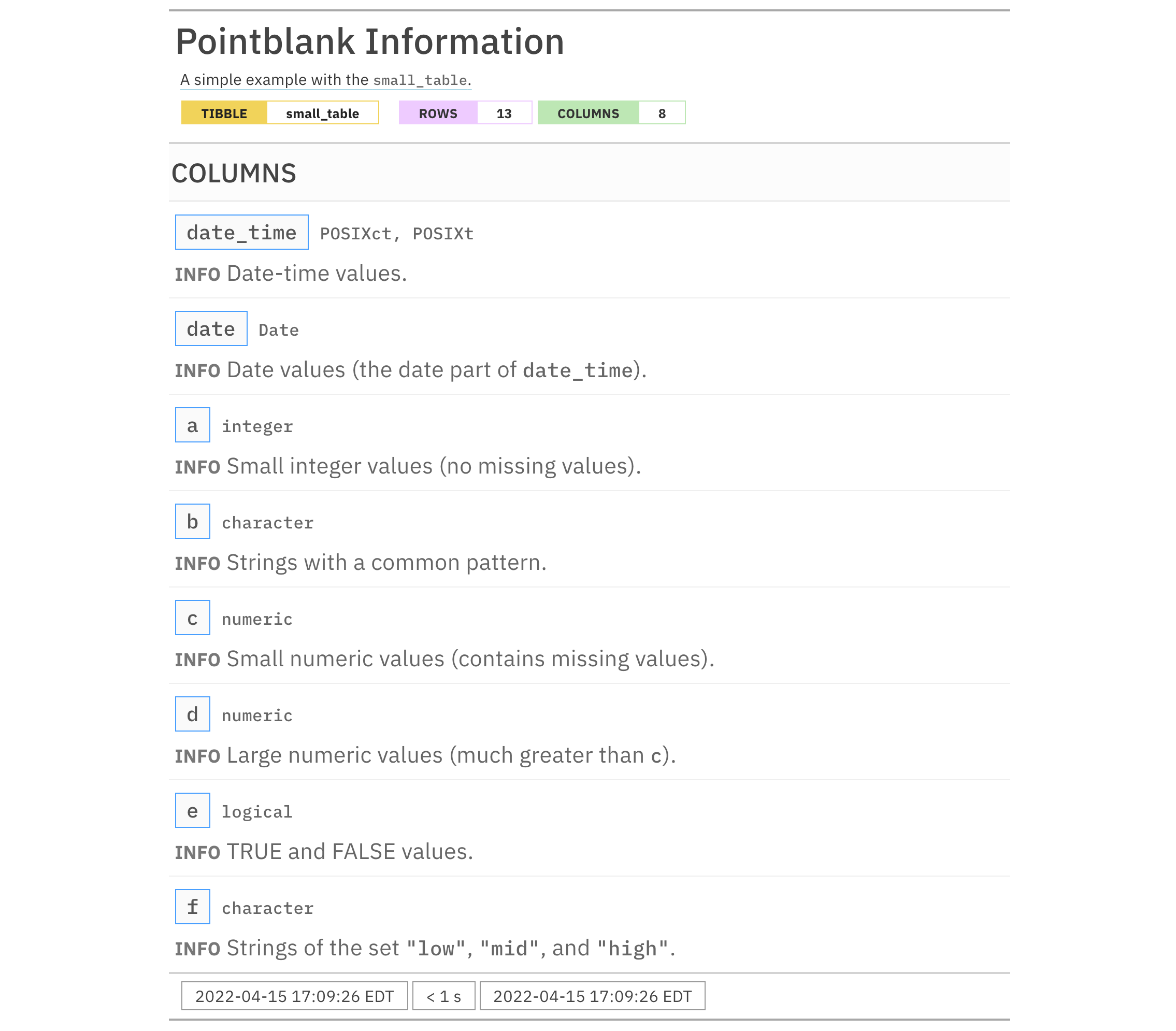

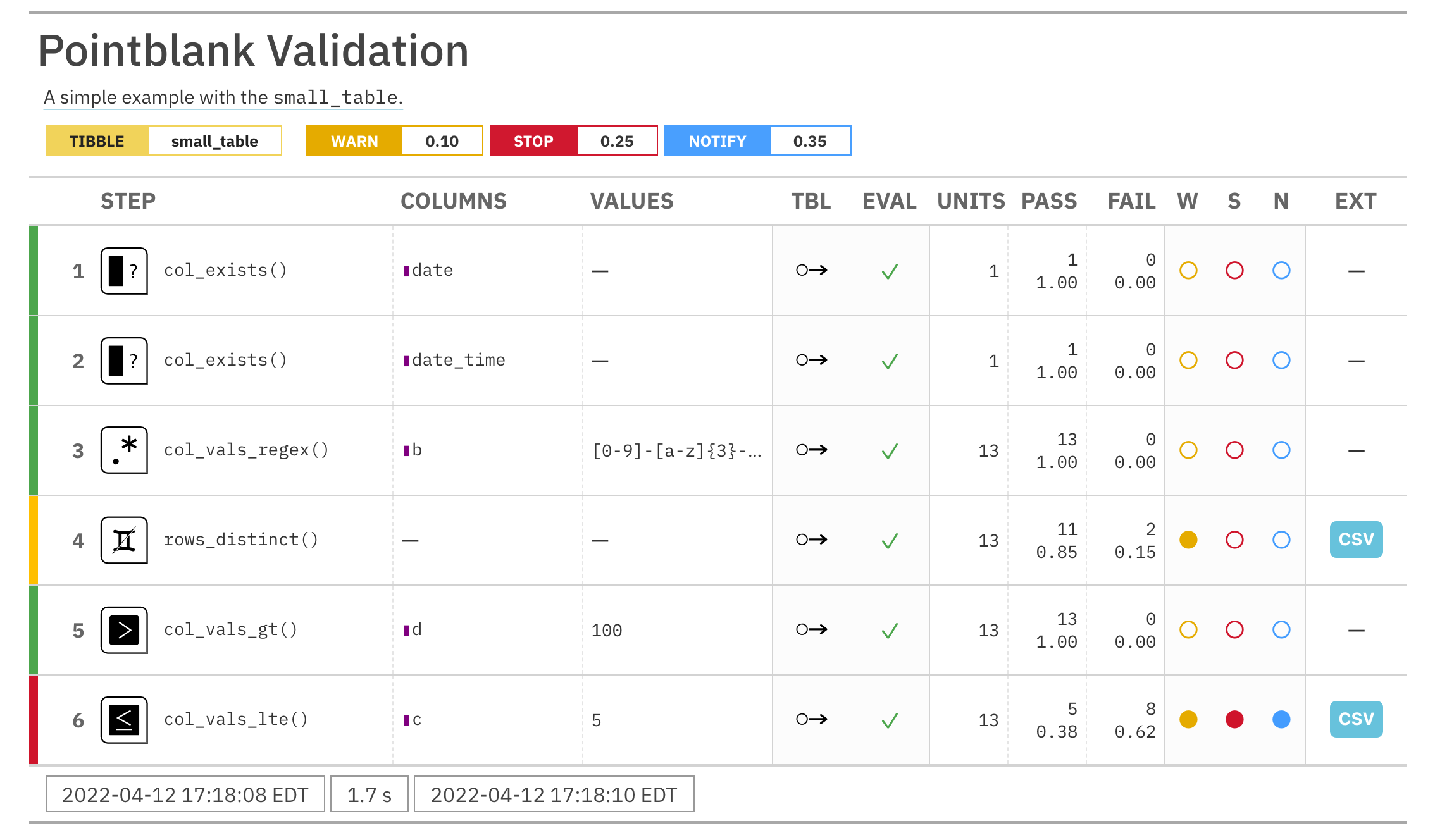

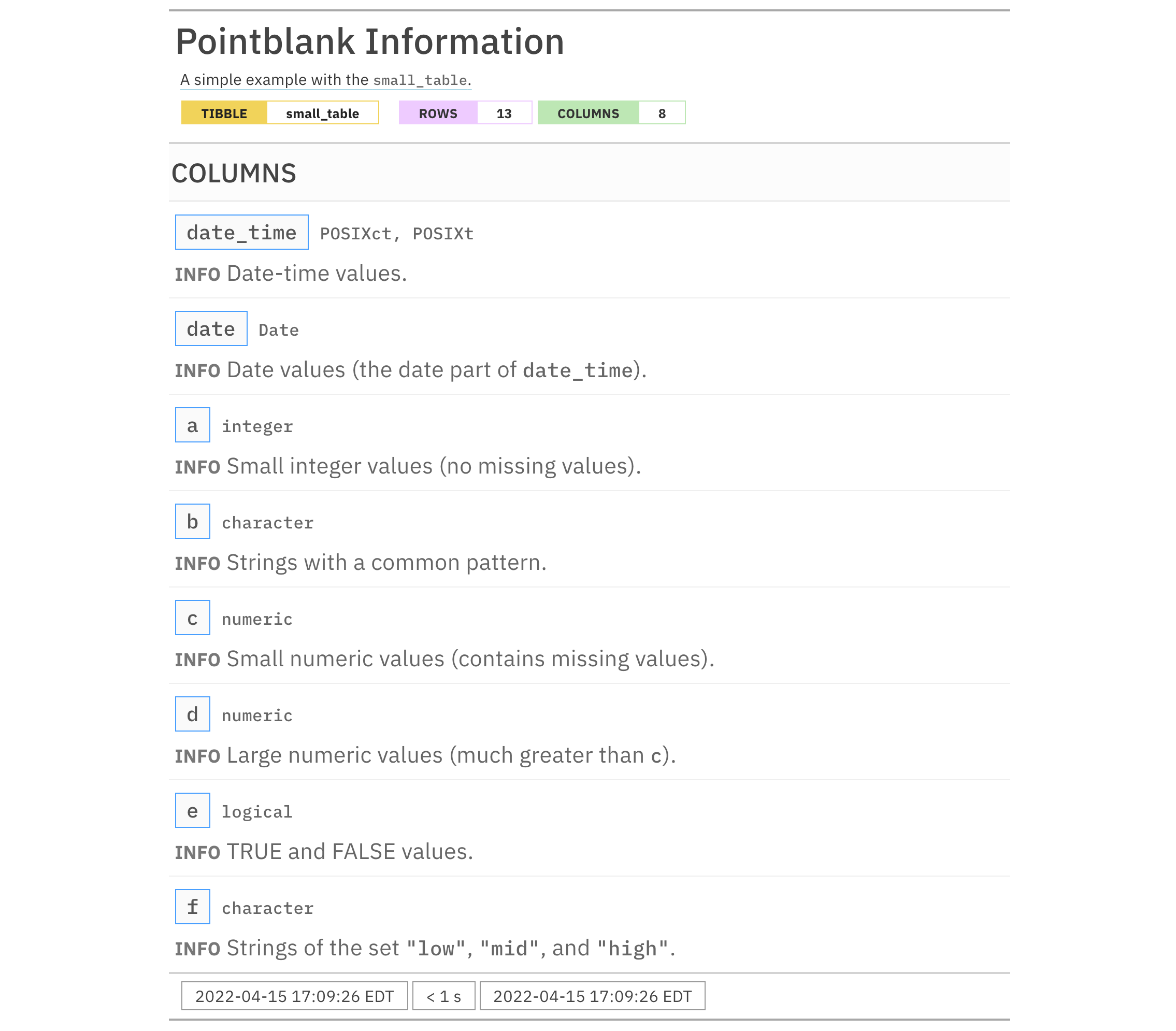

For these examples, we will use the included small_table dataset.

small_table #> # A tibble: 13 x 8 #> date_time date a b c d e f #> <dttm> <date> <int> <chr> <dbl> <dbl> <lgl> <chr> #> 1 2016-01-04 11:00:00 2016-01-04 2 1-bcd-345 3 3423. TRUE high #> 2 2016-01-04 00:32:00 2016-01-04 3 5-egh-163 8 10000. TRUE low #> 3 2016-01-05 13:32:00 2016-01-05 6 8-kdg-938 3 2343. TRUE high #> 4 2016-01-06 17:23:00 2016-01-06 2 5-jdo-903 NA 3892. FALSE mid #> 5 2016-01-09 12:36:00 2016-01-09 8 3-ldm-038 7 284. TRUE low #> 6 2016-01-11 06:15:00 2016-01-11 4 2-dhe-923 4 3291. TRUE mid #> 7 2016-01-15 18:46:00 2016-01-15 7 1-knw-093 3 843. TRUE high #> 8 2016-01-17 11:27:00 2016-01-17 4 5-boe-639 2 1036. FALSE low #> 9 2016-01-20 04:30:00 2016-01-20 3 5-bce-642 9 838. FALSE high #> 10 2016-01-20 04:30:00 2016-01-20 3 5-bce-642 9 838. FALSE high #> 11 2016-01-26 20:07:00 2016-01-26 4 2-dmx-010 7 834. TRUE low #> 12 2016-01-28 02:51:00 2016-01-28 2 7-dmx-010 8 108. FALSE low #> 13 2016-01-30 11:23:00 2016-01-30 1 3-dka-303 NA 2230. TRUE high

Create an action_levels object with fractional values for the warn,

stop, and notify states.

al <-

action_levels(

warn_at = 0.2,

stop_at = 0.8,

notify_at = 0.5

)

A summary of settings for the al object is shown by printing it.

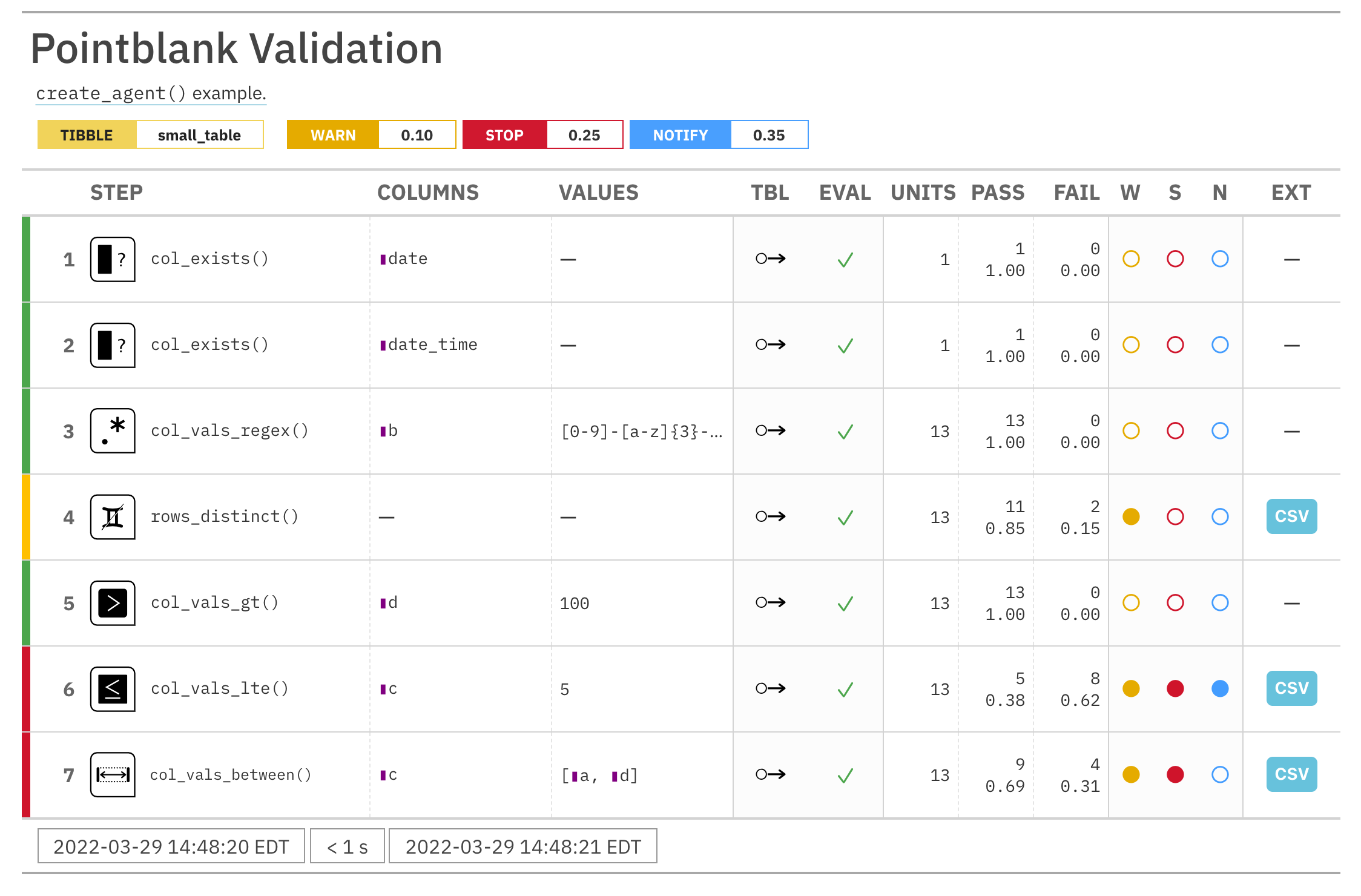

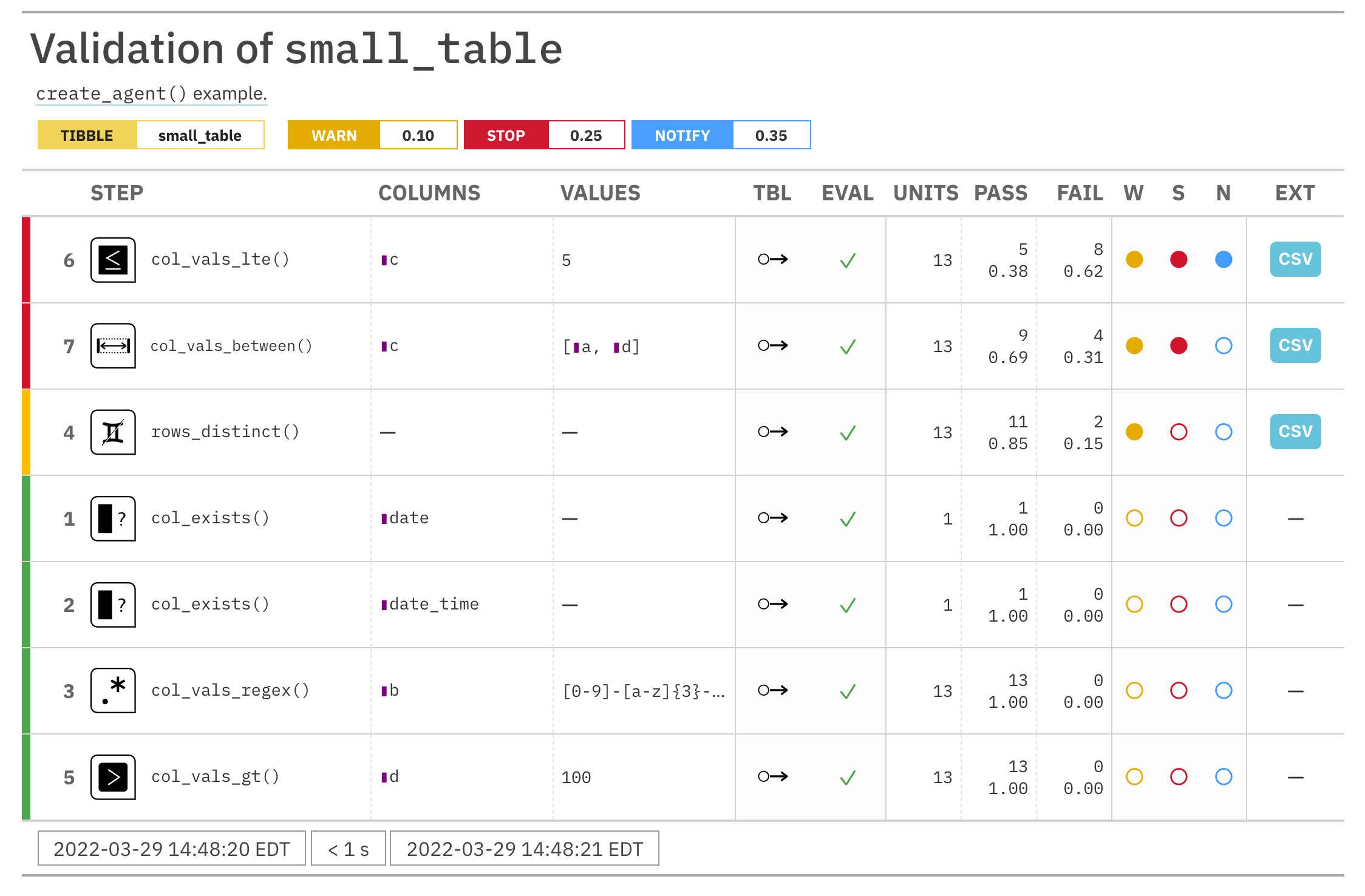

Create a pointblank agent and apply the al object to actions. Add two

validation steps and interrogate the small_table.

agent_1 <-

create_agent(

tbl = small_table,

actions = al

) %>%

col_vals_gt(

columns = a, value = 2

) %>%

col_vals_lt(

columns = d, value = 20000

) %>%

interrogate()

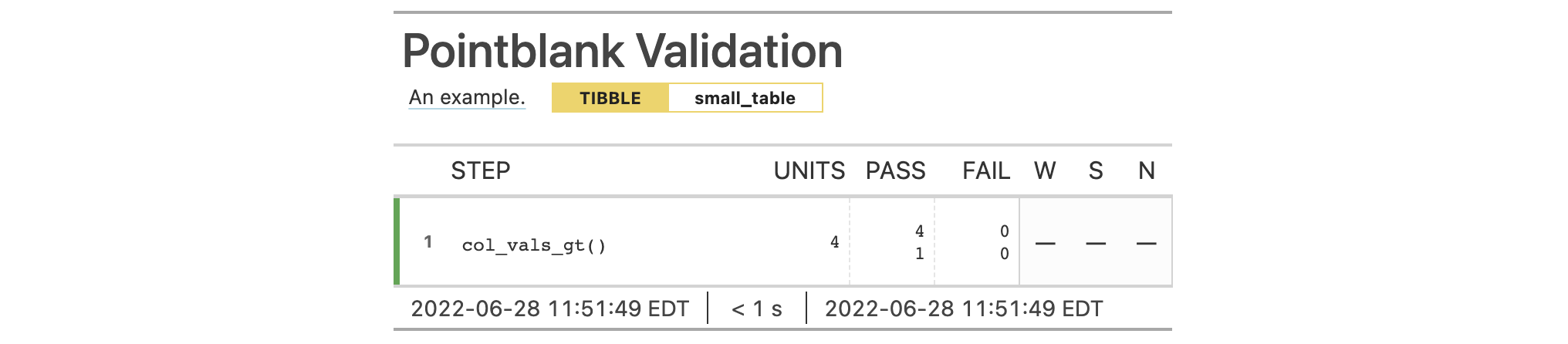

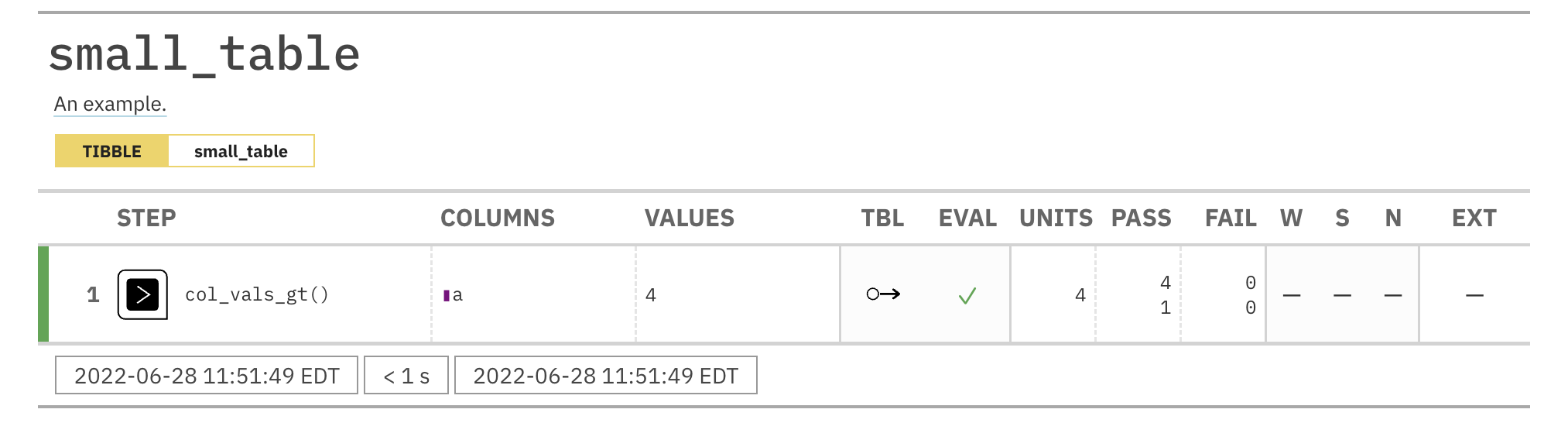

The report from the agent will show that the warn state has been entered

for the first validation step but not the second one. We can confirm this in

the console by inspecting the warn component in the agent's x-list.

x_list <- get_agent_x_list(agent = agent_1) x_list$warn

## [1] TRUE FALSE

Applying the action_levels object to the agent means that all validation

steps will inherit these settings but we can override this by applying

another such object to the validation step instead (this time using the

warn_on_fail() shorthand).

agent_2 <-

create_agent(

tbl = small_table,

actions = al

) %>%

col_vals_gt(

columns = a, value = 2,

actions = warn_on_fail(warn_at = 0.5)

) %>%

col_vals_lt(

columns = d, value = 20000

) %>%

interrogate()

In this case, the first validation step has a less stringent failure

threshold for the warn state and it's high enough that the condition is not

entered. This can be confirmed in the console through inspection of the

x-list warn component.

x_list <- get_agent_x_list(agent = agent_2) x_list$warn

## [1] FALSE FALSE

In the context of using validation functions directly on data (i.e., no

involvement of an agent) we want to trigger warnings and raise errors. The

following will yield a warning if it is executed (returning the small_table

data).

small_table %>%

col_vals_gt(

columns = a, value = 2,

actions = warn_on_fail(warn_at = 2)

)

## # A tibble: 13 × 8 ## date_time date a b c d e ## <dttm> <date> <int> <chr> <dbl> <dbl> <lgl> ## 1 2016-01-04 11:00:00 2016-01-04 2 1-bcd-… 3 3423. TRUE ## 2 2016-01-04 00:32:00 2016-01-04 3 5-egh-… 8 10000. TRUE ## 3 2016-01-05 13:32:00 2016-01-05 6 8-kdg-… 3 2343. TRUE ## 4 2016-01-06 17:23:00 2016-01-06 2 5-jdo-… NA 3892. FALSE ## 5 2016-01-09 12:36:00 2016-01-09 8 3-ldm-… 7 284. TRUE ## 6 2016-01-11 06:15:00 2016-01-11 4 2-dhe-… 4 3291. TRUE ## 7 2016-01-15 18:46:00 2016-01-15 7 1-knw-… 3 843. TRUE ## 8 2016-01-17 11:27:00 2016-01-17 4 5-boe-… 2 1036. FALSE ## 9 2016-01-20 04:30:00 2016-01-20 3 5-bce-… 9 838. FALSE ## 10 2016-01-20 04:30:00 2016-01-20 3 5-bce-… 9 838. FALSE ## 11 2016-01-26 20:07:00 2016-01-26 4 2-dmx-… 7 834. TRUE ## 12 2016-01-28 02:51:00 2016-01-28 2 7-dmx-… 8 108. FALSE ## 13 2016-01-30 11:23:00 2016-01-30 1 3-dka-… NA 2230. TRUE ## # … with 1 more variable: f <chr> ## Warning message: ## Exceedance of failed test units where values in `a` should have been > ## `2`. ## The `col_vals_gt()` validation failed beyond the absolute threshold ## level (2). ## * failure level (4) >= failure threshold (2)

With the same pipeline, not supplying anything for actions (it's NULL by

default) will have the same effect as using stop_on_fail(stop_at = 1).

small_table %>% col_vals_gt(columns = a, value = 2)

## Error: Exceedance of failed test units where values in `a` should have ## been > `2`. ## The `col_vals_gt()` validation failed beyond the absolute threshold ## level (1). ## * failure level (4) >= failure threshold (1)

Here's the equivalent set of statements:

small_table %>%

col_vals_gt(

columns = a, value = 2,

actions = stop_on_fail(stop_at = 1)

)

## Error: Exceedance of failed test units where values in `a` should have ## been > `2`. ## The `col_vals_gt()` validation failed beyond the absolute threshold ## level (1). ## * failure level (4) >= failure threshold (1)

This is because the stop_on_fail() call is auto-injected in the default

case (when operating on data) for your convenience. Behind the scenes a

'secret agent' uses 'covert actions': all so you can type less.

1-5

Other Planning and Prep:

create_agent(),

create_informant(),

db_tbl(),

draft_validation(),

file_tbl(),

scan_data(),

tbl_get(),

tbl_source(),

tbl_store(),

validate_rmd()

If certain validation steps need to be activated after the creation of the

validation plan for an agent, use the activate_steps() function. This is

equivalent to using the active = TRUE for the selected validation steps

(active is an argument in all validation functions). This will replace any

function that may have been defined for the active argument during creation

of the targeted validation steps.

activate_steps(agent, i = NULL)activate_steps(agent, i = NULL)

agent |

The pointblank agent object

A pointblank agent object that is commonly created through the use of

the |

i |

A validation step number

The validation step number, which is assigned to each validation step in

the order of definition. If |

A ptblank_agent object.

9-5

For the opposite behavior, use the deactivate_steps() function.

Other Object Ops:

deactivate_steps(),

export_report(),

remove_steps(),

set_tbl(),

x_read_disk(),

x_write_disk()

# Create an agent that has the # `small_table` object as the # target table, add a few inactive # validation steps, and then use # `interrogate()` agent_1 <- create_agent( tbl = small_table, tbl_name = "small_table", label = "An example." ) %>% col_exists( columns = date, active = FALSE ) %>% col_vals_regex( columns = b, regex = "[0-9]-[a-z]{3}-[0-9]{3}", active = FALSE ) %>% interrogate() # In the above, the data is # not actually interrogated # because the `active` setting # was `FALSE` in all steps; we # can selectively change this # with `activate_steps()` agent_2 <- agent_1 %>% activate_steps(i = 1) %>% interrogate()# Create an agent that has the # `small_table` object as the # target table, add a few inactive # validation steps, and then use # `interrogate()` agent_1 <- create_agent( tbl = small_table, tbl_name = "small_table", label = "An example." ) %>% col_exists( columns = date, active = FALSE ) %>% col_vals_regex( columns = b, regex = "[0-9]-[a-z]{3}-[0-9]{3}", active = FALSE ) %>% interrogate() # In the above, the data is # not actually interrogated # because the `active` setting # was `FALSE` in all steps; we # can selectively change this # with `activate_steps()` agent_2 <- agent_1 %>% activate_steps(i = 1) %>% interrogate()

This function helps to affix the current date to a filename. This is useful when writing agent and/or informant objects to disk as part of a continuous process. The date can be in terms of UTC time or the local system time. The date can be affixed either to the end of the filename (before the file extension) or at the beginning with a customizable delimiter.

The x_write_disk(), yaml_write() functions allow for the writing of

pointblank objects to disk. Furthermore the log4r_step() function has

the append_to argument that accepts filenames, and, it's reasonable that a

series of log files could be differentiated by a date component in the naming

scheme. The modification of the filename string takes effect immediately but

not at the time of writing a file to disk. In most cases, especially when

using affix_date() with the aforementioned file-writing functions, the file

timestamps should approximate the time components affixed to the filenames.

affix_date( filename, position = c("end", "start"), format = "%Y-%m-%d", delimiter = "_", utc_time = TRUE )affix_date( filename, position = c("end", "start"), format = "%Y-%m-%d", delimiter = "_", utc_time = TRUE )

filename |

The filename to modify. |

position |

Where to place the formatted date. This could either be at

the |

format |

A |

delimiter |

The delimiter characters to use for separating the date string from the original file name. |

utc_time |

An option for whether to use the current UTC time to

establish the date (the default, with |

A character vector.

Taking the generic "pb_file" name for a file, we add the current date to it

as a suffix.

affix_date(filename = "pb_file")

## [1] "pb_file_2022-04-01"

File extensions won't get in the way:

affix_date(filename = "pb_file.rds")

## [1] "pb_file_2022-04-01.rds"

The date can be used as a prefix.

affix_date( filename = "pb_file", position = "start" )

## [1] "2022-04-01_pb_file"

The date pattern can be changed and so can the delimiter.

affix_date( filename = "pb_file.yml", format = "%Y%m%d", delimiter = "-" )

## [1] "pb_file-20220401.yml"

We can use a file-naming convention involving dates when writing output files

immediately after interrogating. This is just one example (any workflow

involving a filename argument is applicable). It's really advantageous to

use date-based filenames when interrogating directly from YAML in a scheduled

process.

yaml_agent_interrogate(

filename = system.file(

"yaml", "agent-small_table.yml",

package = "pointblank"

)

) %>%

x_write_disk(

filename = affix_date(

filename = "small_table_agent.rds",

delimiter = "-"

),

keep_tbl = TRUE,

keep_extracts = TRUE

)

In the above, we used the written-to-disk agent (The

"agent-small_table.yml" YAML file) for an interrogation via

yaml_agent_interrogate(). Then, the results were written to disk as an RDS

file. In the filename argument of x_write_disk(), the affix_date()

function was used to ensure that a daily run would produce a file whose name

indicates the day of execution.

13-3

The affix_datetime() function provides the same features except it

produces a datetime string by default.

Other Utility and Helper Functions:

affix_datetime(),

col_schema(),

from_github(),

has_columns(),

stop_if_not()

This function helps to affix the current datetime to a filename. This is

useful when writing agent and/or informant objects to disk as part of a

continuous process. The datetime string can be based on the current UTC time

or the local system time. The datetime can be affixed either to the end of

the filename (before the file extension) or at the beginning with a

customizable delimiter. Optionally, the time zone information can be

included. If the datetime is based on the local system time, the user system

time zone is shown with the format <time>(+/-)hhmm. If using UTC time, then

the <time>Z format is adopted.

The x_write_disk(), yaml_write() functions allow for the writing of

pointblank objects to disk. The modification of the filename string takes

effect immediately but not at the time of writing a file to disk. In most

cases, especially when using affix_datetime() with the aforementioned

file-writing functions, the file timestamps should approximate the time

components affixed to the filenames.

affix_datetime( filename, position = c("end", "start"), format = "%Y-%m-%d_%H-%M-%S", delimiter = "_", utc_time = TRUE, add_tz = FALSE )affix_datetime( filename, position = c("end", "start"), format = "%Y-%m-%d_%H-%M-%S", delimiter = "_", utc_time = TRUE, add_tz = FALSE )

filename |

The filename to modify. |

position |

Where to place the formatted datetime. This could either be

at the |

format |

A |

delimiter |

The delimiter characters to use for separating the datetime string from the original file name. |

utc_time |

An option for whether to use the current UTC time to

establish the datetime (the default, with |

add_tz |

Should the time zone (as an offset from UTC) be provided? If

|

A character vector.

Taking the generic "pb_file" name for a file, we add the current datetime

to it as a suffix.

affix_datetime(filename = "pb_file")

## [1] "pb_file_2022-04-01_00-32-53"

File extensions won't get in the way:

affix_datetime(filename = "pb_file.rds")

## [1] "pb_file_2022-04-01_00-32-53.rds"

The datetime can be used as a prefix.

affix_datetime( filename = "pb_file", position = "start" )

## [1] "2022-04-01_00-32-53_pb_file"

The datetime pattern can be changed and so can the delimiter.

affix_datetime( filename = "pb_file.yml", format = "%Y%m%d_%H%M%S", delimiter = "-" )

## [1] "pb_file-20220401_003253.yml"

Time zone information can be included. By default, all datetimes are given in the UTC time zone.

affix_datetime( filename = "pb_file.yml", add_tz = TRUE )

## [1] "pb_file_2022-04-01_00-32-53Z.yml"

We can use the system's local time zone with utc_time = FALSE.

affix_datetime( filename = "pb_file.yml", utc_time = FALSE, add_tz = TRUE )

## [1] "pb_file_2022-03-31_20-32-53-0400.yml"

We can use a file-naming convention involving datetimes when writing output

files immediately after interrogating. This is just one example (any workflow

involving a filename argument is applicable). It's really advantageous to

use datetime-based filenames when interrogating directly from YAML in a

scheduled process, especially if multiple validation runs per day are being

executed on the same target table.

yaml_agent_interrogate(

filename = system.file(

"yaml", "agent-small_table.yml",

package = "pointblank"

)

) %>%

x_write_disk(

filename = affix_datetime(

filename = "small_table_agent.rds",

delimiter = "-"

),

keep_tbl = TRUE,

keep_extracts = TRUE

)

In the above, we used the written-to-disk agent (The

"agent-small_table.yml" YAML file) for an interrogation via

yaml_agent_interrogate(). Then, the results were written to disk as an RDS

file. In the filename argument of x_write_disk(), the affix_datetime()

function was used to ensure that frequent runs would produce files whose

names indicate the day and time of execution.

13-4

The affix_date() function provides the same features except it

produces a date string by default.

Other Utility and Helper Functions:

affix_date(),

col_schema(),

from_github(),

has_columns(),

stop_if_not()

Given an agent's validation plan that had undergone interrogation via

interrogate(), did every single validation step result in zero failing

test units? Using the all_passed() function will let us know whether that's

TRUE or not.

all_passed(agent, i = NULL)all_passed(agent, i = NULL)

agent |

The pointblank agent object

A pointblank agent object that is commonly created through the use of

the |

i |

Validation step numbers

A vector of validation step numbers. These values are assigned to each

validation step by pointblank in the order of definition. If |

The all_passed() function provides a single logical value based on an

interrogation performed in the agent-based workflow. For very large-scale

validation (where data quality is a known issue, and is perhaps something to

be tamed over time) this function is likely to be less useful since it is

quite stringent (all test units must pass across all validation steps).

Should there be a requirement for logical values produced from validation, a

more flexible alternative is in using the test (test_*()) variants of the

validation functions. Each of those produce a single logical value and each

and have a threshold option for failure levels. Another option is to

utilize post-interrogation objects within the agent's x-list (obtained by

using the get_agent_x_list() function). This allows for many possibilities

in producing a single logical value from an interrogation.

A logical value.

Create a simple table with a column of numerical values.

tbl <- dplyr::tibble(a = c(4, 5, 7, 8)) tbl #> # A tibble: 4 x 1 #> a #> <dbl> #> 1 4 #> 2 5 #> 3 7 #> 4 8

Validate that values in column a are always greater than 4.

agent <- create_agent(tbl = tbl) %>% col_vals_gt(columns = a, value = 3) %>% col_vals_lte(columns = a, value = 10) %>% col_vals_increasing(columns = a) %>% interrogate()

Determine if these column validations have all passed by using all_passed()

(they do).

all_passed(agent = agent)

#> [1] TRUE

8-4

Other Post-interrogation:

get_agent_x_list(),

get_data_extracts(),

get_sundered_data(),

write_testthat_file()

The col_count_match() validation function, the expect_col_count_match()

expectation function, and the test_col_count_match() test function all

check whether the column count in the target table matches that of a

comparison table. The validation function can be used directly on a data

table or with an agent object (technically, a ptblank_agent object)

whereas the expectation and test functions can only be used with a data

table. As a validation step or as an expectation, there is a single test unit

that hinges on whether the column counts for the two tables are the same

(after any preconditions have been applied).

col_count_match( x, count, preconditions = NULL, actions = NULL, step_id = NULL, label = NULL, brief = NULL, active = TRUE ) expect_col_count_match(object, count, preconditions = NULL, threshold = 1) test_col_count_match(object, count, preconditions = NULL, threshold = 1)col_count_match( x, count, preconditions = NULL, actions = NULL, step_id = NULL, label = NULL, brief = NULL, active = TRUE ) expect_col_count_match(object, count, preconditions = NULL, threshold = 1) test_col_count_match(object, count, preconditions = NULL, threshold = 1)

x |

A pointblank agent or a data table

A data frame, tibble ( |

count |

The count comparison

Either a literal value for the number of columns, or, a table to compare

against the target table in terms of column count values. If supplying a

comparison table, it can either be a table object such as a data frame, a

tibble, a |

preconditions |

Input table modification prior to validation

An optional expression for mutating the input table before proceeding with

the validation. This can either be provided as a one-sided R formula using

a leading |

actions |

Thresholds and actions for different states

A list containing threshold levels so that the validation step can react

accordingly when exceeding the set levels for different states. This is to

be created with the |

step_id |

Manual setting of the step ID value

One or more optional identifiers for the single or multiple validation

steps generated from calling a validation function. The use of step IDs

serves to distinguish validation steps from each other and provide an

opportunity for supplying a more meaningful label compared to the step

index. By default this is |

label |

Optional label for the validation step

Optional label for the validation step. This label appears in the agent report and, for the best appearance, it should be kept quite short. See the Labels section for more information. |

brief |

Brief description for the validation step

A brief is a short, text-based description for the validation step. If

nothing is provided here then an autobrief is generated by the agent,

using the language provided in |

active |

Is the validation step active?

A logical value indicating whether the validation step should be active. If

the validation function is working with an agent, |

object |

A data table for expectations or tests

A data frame, tibble ( |

threshold |

The failure threshold

A simple failure threshold value for use with the expectation ( |

For the validation function, the return value is either a

ptblank_agent object or a table object (depending on whether an agent

object or a table was passed to x). The expectation function invisibly

returns its input but, in the context of testing data, the function is

called primarily for its potential side-effects (e.g., signaling failure).

The test function returns a logical value.

The types of data tables that are officially supported are:

data frames (data.frame) and tibbles (tbl_df)

Spark DataFrames (tbl_spark)

the following database tables (tbl_dbi):

PostgreSQL tables (using the RPostgres::Postgres() as driver)

MySQL tables (with RMySQL::MySQL())

Microsoft SQL Server tables (via odbc)

BigQuery tables (using bigrquery::bigquery())

DuckDB tables (through duckdb::duckdb())

SQLite (with RSQLite::SQLite())

Other database tables may work to varying degrees but they haven't been formally tested (so be mindful of this when using unsupported backends with pointblank).

Providing expressions as preconditions means pointblank will preprocess

the target table during interrogation as a preparatory step. It might happen

that this particular validation requires some operation on the target table

before the column count comparison takes place. Using preconditions can be

useful at times since since we can develop a large validation plan with a

single target table and make minor adjustments to it, as needed, along the

way.

The table mutation is totally isolated in scope to the validation step(s)

where preconditions is used. Using dplyr code is suggested here since

the statements can be translated to SQL if necessary (i.e., if the target

table resides in a database). The code is most easily supplied as a one-sided

R formula (using a leading ~). In the formula representation, the .

serves as the input data table to be transformed. Alternatively, a function

could instead be supplied.

Often, we will want to specify actions for the validation. This argument,

present in every validation function, takes a specially-crafted list object

that is best produced by the action_levels() function. Read that function's

documentation for the lowdown on how to create reactions to above-threshold

failure levels in validation. The basic gist is that you'll want at least a

single threshold level (specified as either the fraction of test units

failed, or, an absolute value), often using the warn_at argument. Using

action_levels(warn_at = 1) or action_levels(stop_at = 1) are good choices

depending on the situation (the first produces a warning, the other

stop()s).

label may be a single string or a character vector that matches the number

of expanded steps. label also supports {glue} syntax and exposes the

following dynamic variables contextualized to the current step:

"{.step}": The validation step name

The glue context also supports ordinary expressions for further flexibility

(e.g., "{toupper(.step)}") as long as they return a length-1 string.

Want to describe this validation step in some detail? Keep in mind that this

is only useful if x is an agent. If that's the case, brief the agent

with some text that fits. Don't worry if you don't want to do it. The

autobrief protocol is kicked in when brief = NULL and a simple brief will

then be automatically generated.

A pointblank agent can be written to YAML with yaml_write() and the

resulting YAML can be used to regenerate an agent (with yaml_read_agent())

or interrogate the target table (via yaml_agent_interrogate()). When

col_count_match() is represented in YAML (under the top-level steps key

as a list member), the syntax closely follows the signature of the validation

function. Here is an example of how a complex call of col_count_match() as

a validation step is expressed in R code and in the corresponding YAML

representation.

R statement:

agent %>%

col_count_match(

count = ~ file_tbl(

file = from_github(

file = "sj_all_revenue_large.rds",

repo = "rich-iannone/intendo",

subdir = "data-large"

)

),

preconditions = ~ . %>% dplyr::filter(a < 10),

actions = action_levels(warn_at = 0.1, stop_at = 0.2),

label = "The `col_count_match()` step.",

active = FALSE

)

YAML representation:

steps:

- col_count_match:

count: ~ file_tbl(

file = from_github(

file = "sj_all_revenue_large.rds",

repo = "rich-iannone/intendo",

subdir = "data-large"

)

)

preconditions: ~. %>% dplyr::filter(a < 10)

actions:

warn_fraction: 0.1

stop_fraction: 0.2

label: The `col_count_match()` step.

active: false

In practice, both of these will often be shorter. Arguments with default

values won't be written to YAML when using yaml_write() (though it is

acceptable to include them with their default when generating the YAML by

other means). It is also possible to preview the transformation of an agent

to YAML without any writing to disk by using the yaml_agent_string()

function.

Create a simple table with three columns and three rows of values:

tbl <-

dplyr::tibble(

a = c(5, 7, 6),

b = c(7, 1, 0),

c = c(1, 1, 1)

)

tbl

#> # A tibble: 3 x 3

#> a b c

#> <dbl> <dbl> <dbl>

#> 1 5 7 1

#> 2 7 1 1

#> 3 6 0 1

Create a second table which is quite different but has the same number of

columns as tbl.

tbl_2 <-

dplyr::tibble(

e = c("a", NA, "a", "c"),

f = c(2.6, 1.2, 0, NA),

g = c("f", "g", "h", "i")

)

tbl_2

#> # A tibble: 4 x 3

#> e f g

#> <chr> <dbl> <chr>

#> 1 a 2.6 f

#> 2 <NA> 1.2 g

#> 3 a 0 h

#> 4 c NA i

We'll use these tables with the different function variants.

agent with validation functions and then interrogate()

Validate that the count of columns in the target table (tbl) matches that

of the comparison table (tbl_2).

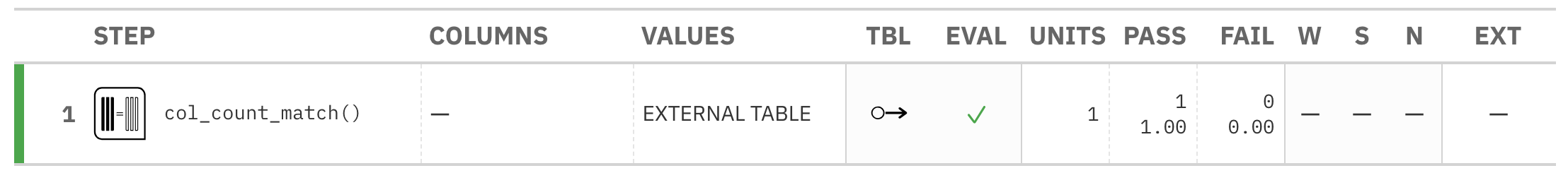

agent <- create_agent(tbl = tbl) %>% col_count_match(count = tbl_2) %>% interrogate()

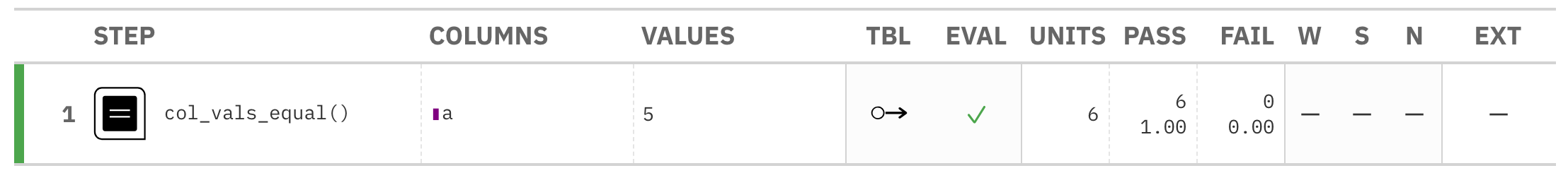

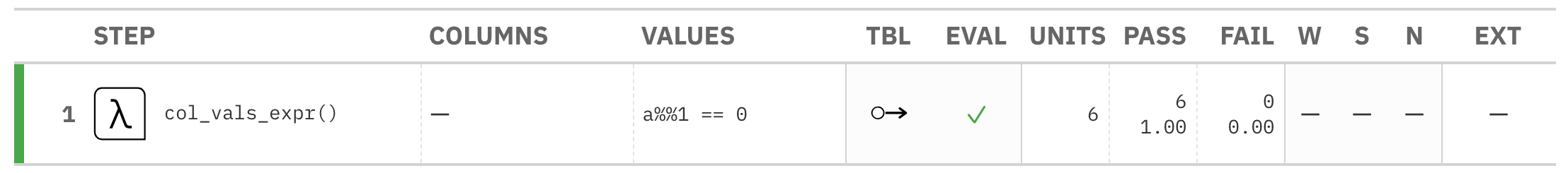

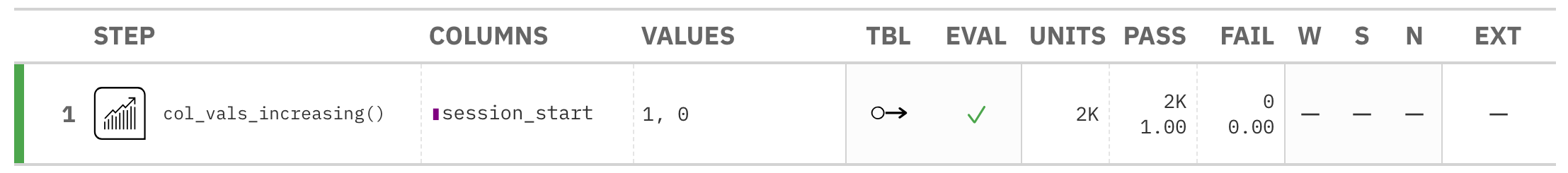

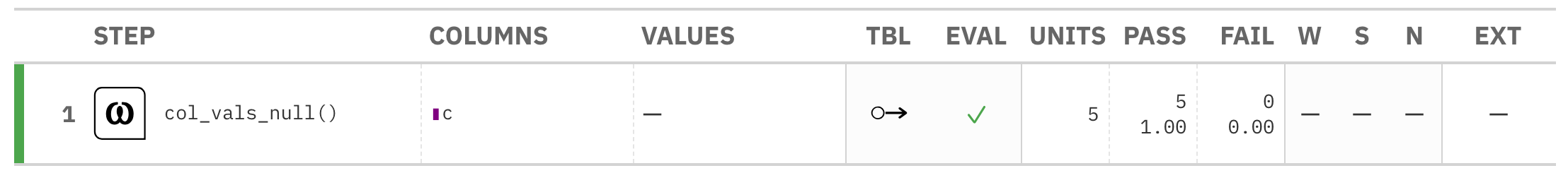

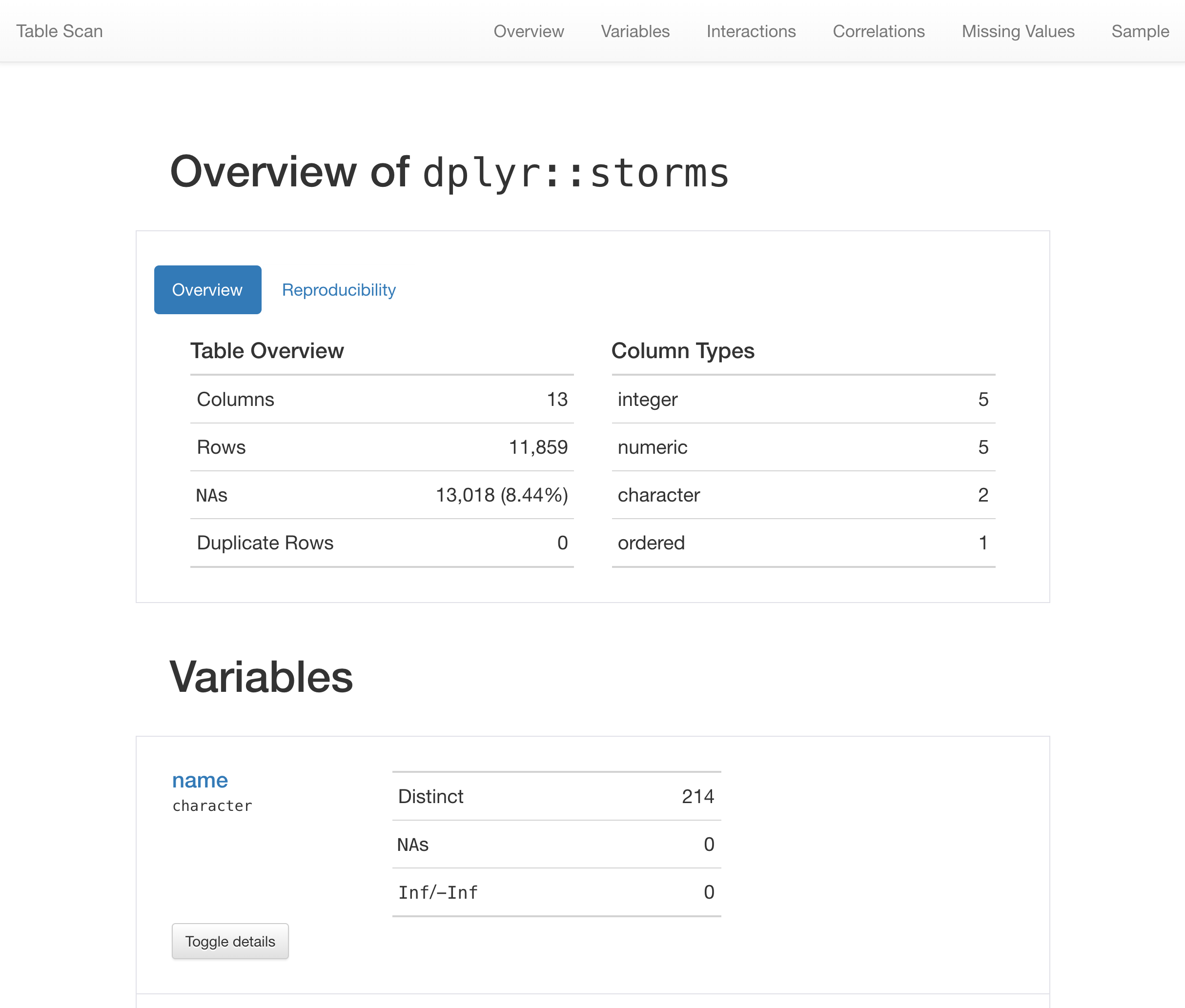

Printing the agent in the console shows the validation report in the

Viewer. Here is an excerpt of validation report, showing the single entry

that corresponds to the validation step demonstrated here.

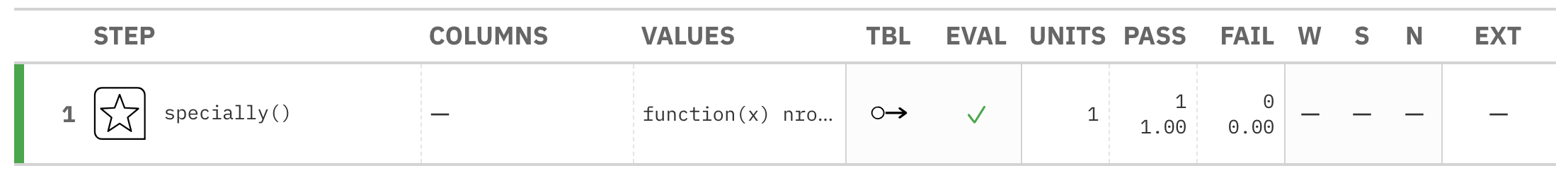

agent)This way of using validation functions acts as a data filter: data is passed

through but should stop() if there is a single test unit failing. The

behavior of side effects can be customized with the actions option.

tbl %>% col_count_match(count = tbl_2) #> # A tibble: 3 x 3 #> a b c #> <dbl> <dbl> <dbl> #> 1 5 7 1 #> 2 7 1 1 #> 3 6 0 1

With the expect_*() form, we would typically perform one validation at a

time. This is primarily used in testthat tests.

expect_col_count_match(tbl, count = tbl_2)

With the test_*() form, we should get a single logical value returned to

us.

tbl %>% test_col_count_match(count = 3) #> [1] TRUE

2-32

Other validation functions:

col_exists(),

col_is_character(),

col_is_date(),

col_is_factor(),

col_is_integer(),

col_is_logical(),

col_is_numeric(),

col_is_posix(),

col_schema_match(),

col_vals_between(),

col_vals_decreasing(),

col_vals_equal(),

col_vals_expr(),

col_vals_gt(),

col_vals_gte(),

col_vals_in_set(),

col_vals_increasing(),

col_vals_lt(),

col_vals_lte(),

col_vals_make_set(),

col_vals_make_subset(),

col_vals_not_between(),

col_vals_not_equal(),

col_vals_not_in_set(),

col_vals_not_null(),

col_vals_null(),

col_vals_regex(),

col_vals_within_spec(),

conjointly(),

row_count_match(),

rows_complete(),

rows_distinct(),

serially(),

specially(),

tbl_match()

The col_exists() validation function, the expect_col_exists() expectation

function, and the test_col_exists() test function all check whether one or

more columns exist in the target table. The only requirement is specification

of the column names. The validation function can be used directly on a data

table or with an agent object (technically, a ptblank_agent object)

whereas the expectation and test functions can only be used with a data

table. Each validation step or expectation will operate over a single test

unit, which is whether the column exists or not.

col_exists( x, columns = NULL, actions = NULL, step_id = NULL, label = NULL, brief = NULL, active = TRUE ) expect_col_exists(object, columns, threshold = 1) test_col_exists(object, columns, threshold = 1)col_exists( x, columns = NULL, actions = NULL, step_id = NULL, label = NULL, brief = NULL, active = TRUE ) expect_col_exists(object, columns, threshold = 1) test_col_exists(object, columns, threshold = 1)

x |

A pointblank agent or a data table

A data frame, tibble ( |

columns |

The target columns

A column-selecting expression, as one would use inside |

actions |

Thresholds and actions for different states

A list containing threshold levels so that the validation step can react

accordingly when exceeding the set levels for different states. This is to

be created with the |

step_id |

Manual setting of the step ID value

One or more optional identifiers for the single or multiple validation

steps generated from calling a validation function. The use of step IDs

serves to distinguish validation steps from each other and provide an

opportunity for supplying a more meaningful label compared to the step

index. By default this is |

label |

Optional label for the validation step

Optional label for the validation step. This label appears in the agent report and, for the best appearance, it should be kept quite short. See the Labels section for more information. |

brief |

Brief description for the validation step

A brief is a short, text-based description for the validation step. If

nothing is provided here then an autobrief is generated by the agent,

using the language provided in |

active |

Is the validation step active?

A logical value indicating whether the validation step should be active. If

the validation function is working with an agent, |

object |

A data table for expectations or tests

A data frame, tibble ( |

threshold |

The failure threshold

A simple failure threshold value for use with the expectation ( |

For the validation function, the return value is either a

ptblank_agent object or a table object (depending on whether an agent

object or a table was passed to x). The expectation function invisibly

returns its input but, in the context of testing data, the function is

called primarily for its potential side-effects (e.g., signaling failure).

The test function returns a logical value.

The types of data tables that are officially supported are:

data frames (data.frame) and tibbles (tbl_df)

Spark DataFrames (tbl_spark)

the following database tables (tbl_dbi):

PostgreSQL tables (using the RPostgres::Postgres() as driver)

MySQL tables (with RMySQL::MySQL())

Microsoft SQL Server tables (via odbc)

BigQuery tables (using bigrquery::bigquery())

DuckDB tables (through duckdb::duckdb())

SQLite (with RSQLite::SQLite())

Other database tables may work to varying degrees but they haven't been formally tested (so be mindful of this when using unsupported backends with pointblank).

columns may be a single column (as symbol a or string "a") or a vector

of columns (c(a, b, c) or c("a", "b", "c")). {tidyselect} helpers

are also supported, such as contains("date") and where(is.double). If

passing an external vector of columns, it should be wrapped in all_of().

When multiple columns are selected by columns, the result will be an

expansion of validation steps to that number of columns (e.g.,

c(col_a, col_b) will result in the entry of two validation steps).

Previously, columns could be specified in vars(). This continues to work,

but c() offers the same capability and supersedes vars() in columns.

Often, we will want to specify actions for the validation. This argument,

present in every validation function, takes a specially-crafted list object

that is best produced by the action_levels() function. Read that function's

documentation for the lowdown on how to create reactions to above-threshold

failure levels in validation. The basic gist is that you'll want at least a

single threshold level (specified as either the fraction of test units

failed, or, an absolute value), often using the warn_at argument. Using

action_levels(warn_at = 1) or action_levels(stop_at = 1) are good choices

depending on the situation (the first produces a warning, the other

stop()s).

label may be a single string or a character vector that matches the number

of expanded steps. label also supports {glue} syntax and exposes the

following dynamic variables contextualized to the current step:

"{.step}": The validation step name

"{.col}": The current column name

The glue context also supports ordinary expressions for further flexibility

(e.g., "{toupper(.step)}") as long as they return a length-1 string.

Want to describe this validation step in some detail? Keep in mind that this

is only useful if x is an agent. If that's the case, brief the agent

with some text that fits. Don't worry if you don't want to do it. The

autobrief protocol is kicked in when brief = NULL and a simple brief will

then be automatically generated.

A pointblank agent can be written to YAML with yaml_write() and the

resulting YAML can be used to regenerate an agent (with yaml_read_agent())

or interrogate the target table (via yaml_agent_interrogate()). When

col_exists() is represented in YAML (under the top-level steps key as a

list member), the syntax closely follows the signature of the validation

function. Here is an example of how a complex call of col_exists() as a

validation step is expressed in R code and in the corresponding YAML

representation.

R statement:

agent %>%

col_exists(

columns = a,

actions = action_levels(warn_at = 0.1, stop_at = 0.2),

label = "The `col_exists()` step.",

active = FALSE

)

YAML representation:

steps:

- col_exists:

columns: c(a)

actions:

warn_fraction: 0.1

stop_fraction: 0.2

label: The `col_exists()` step.

active: false

In practice, both of these will often be shorter as only the columns

argument requires a value. Arguments with default values won't be written to

YAML when using yaml_write() (though it is acceptable to include them with

their default when generating the YAML by other means). It is also possible

to preview the transformation of an agent to YAML without any writing to disk

by using the yaml_agent_string() function.

For all examples here, we'll use a simple table with two columns: a and

b.

tbl <-

dplyr::tibble(

a = c(5, 7, 6, 5, 8, 7),

b = c(7, 1, 0, 0, 0, 3)

)

tbl

#> # A tibble: 6 x 2

#> a b

#> <dbl> <dbl>

#> 1 5 7

#> 2 7 1

#> 3 6 0

#> 4 5 0

#> 5 8 0

#> 6 7 3

We'll use this table with the different function variants.

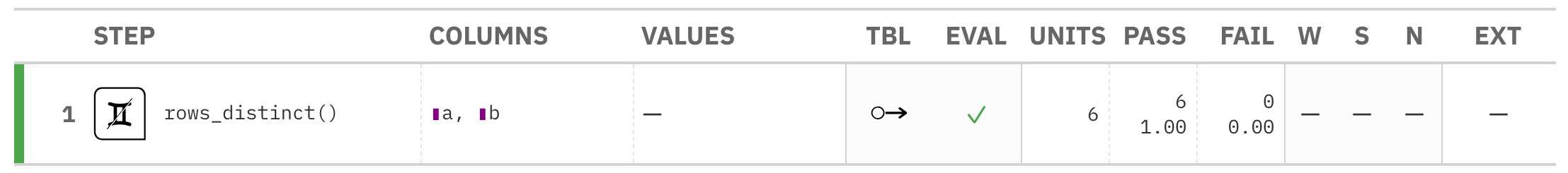

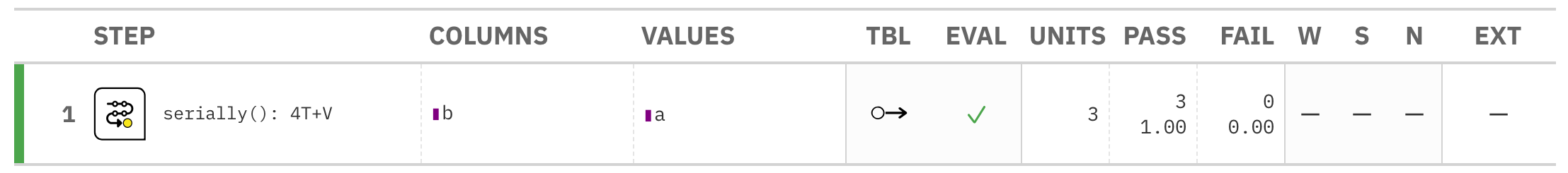

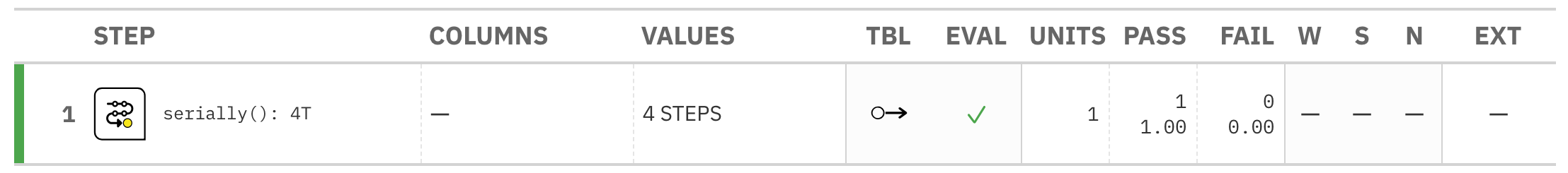

agent with validation functions and then interrogate()

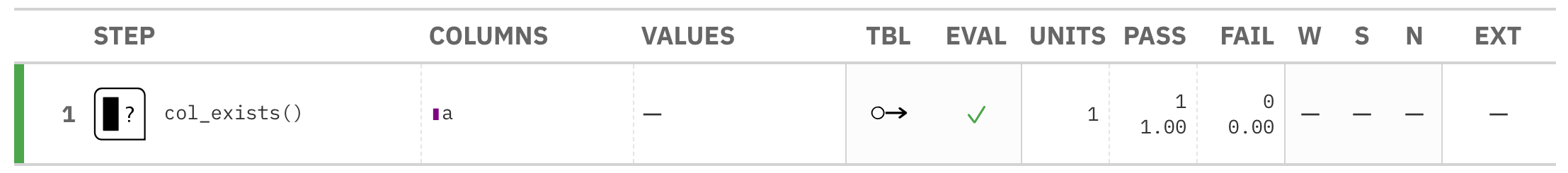

Validate that column a exists in the tbl table with col_exists().

agent <- create_agent(tbl = tbl) %>% col_exists(columns = a) %>% interrogate()

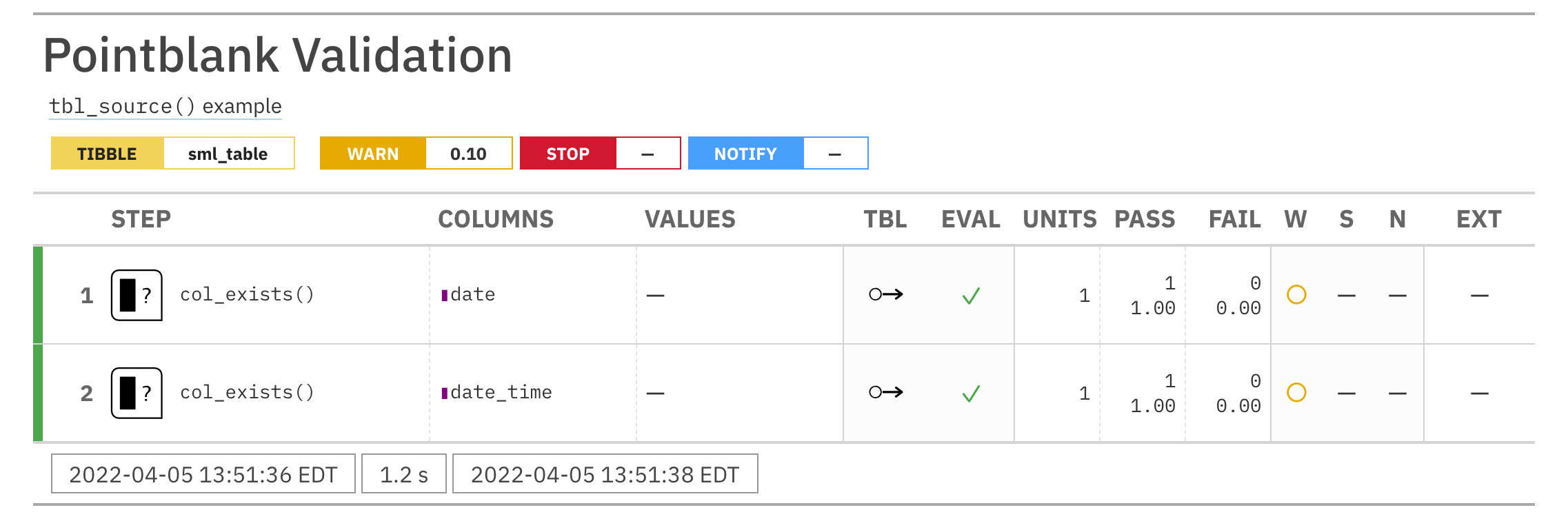

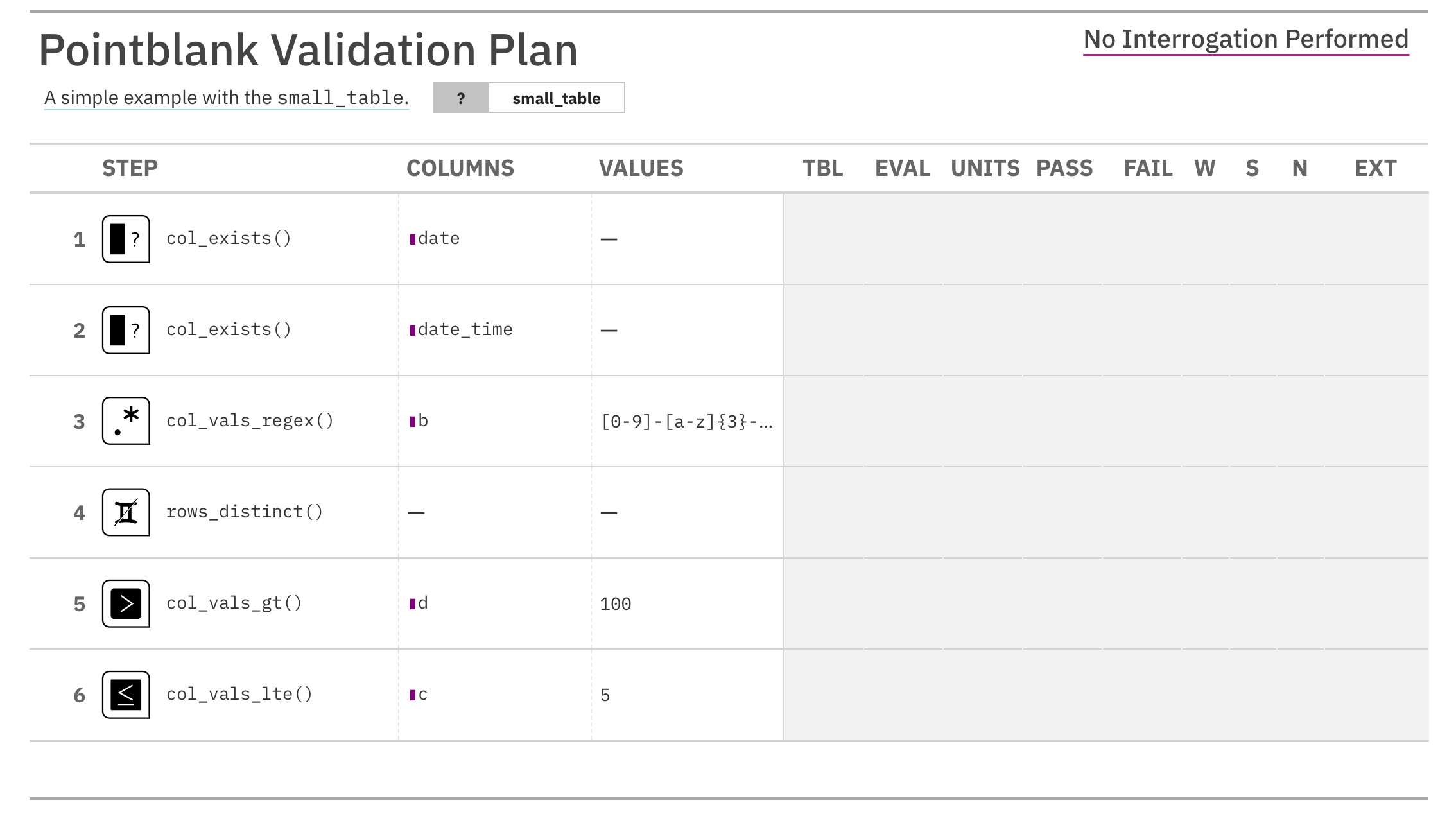

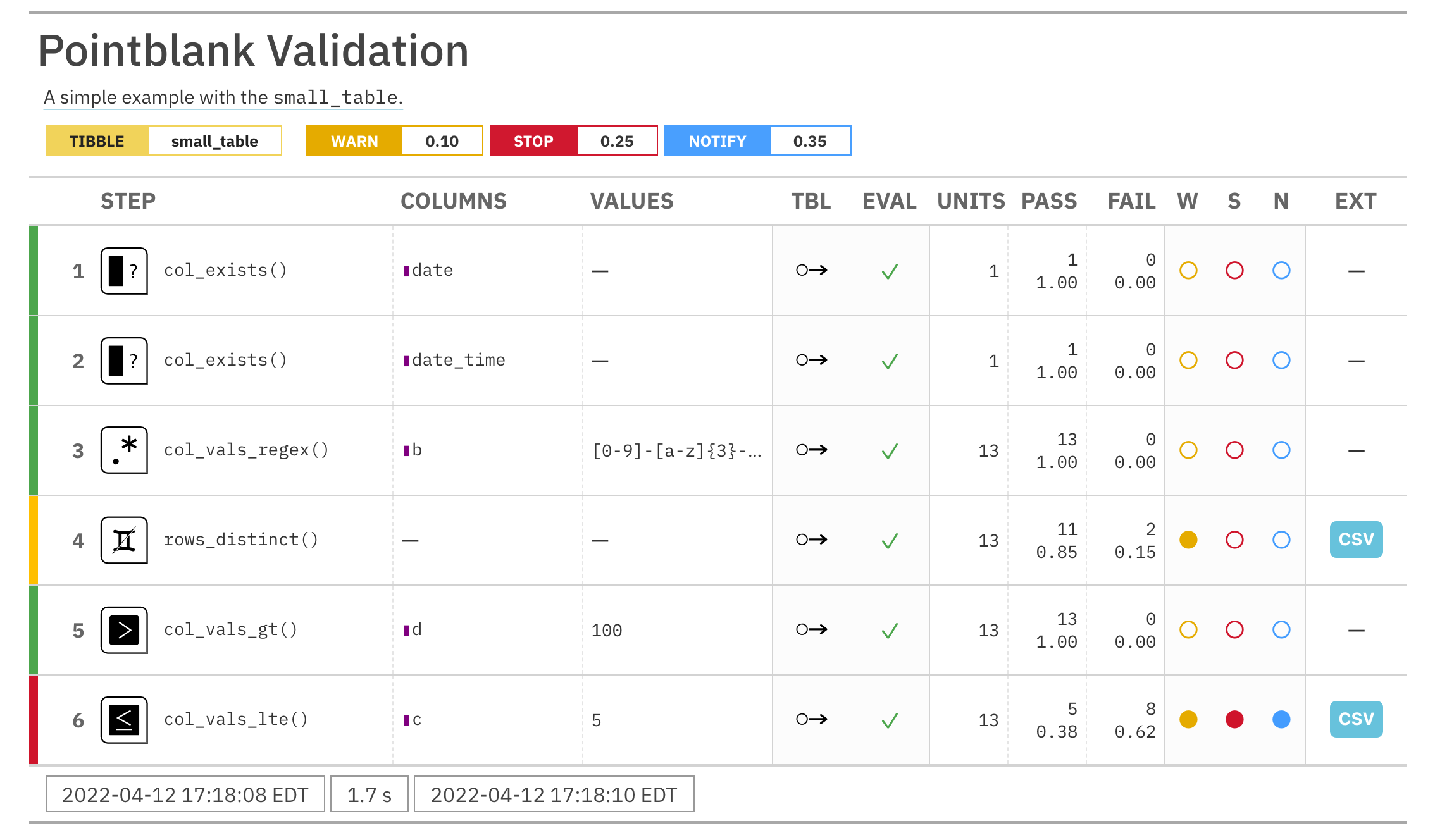

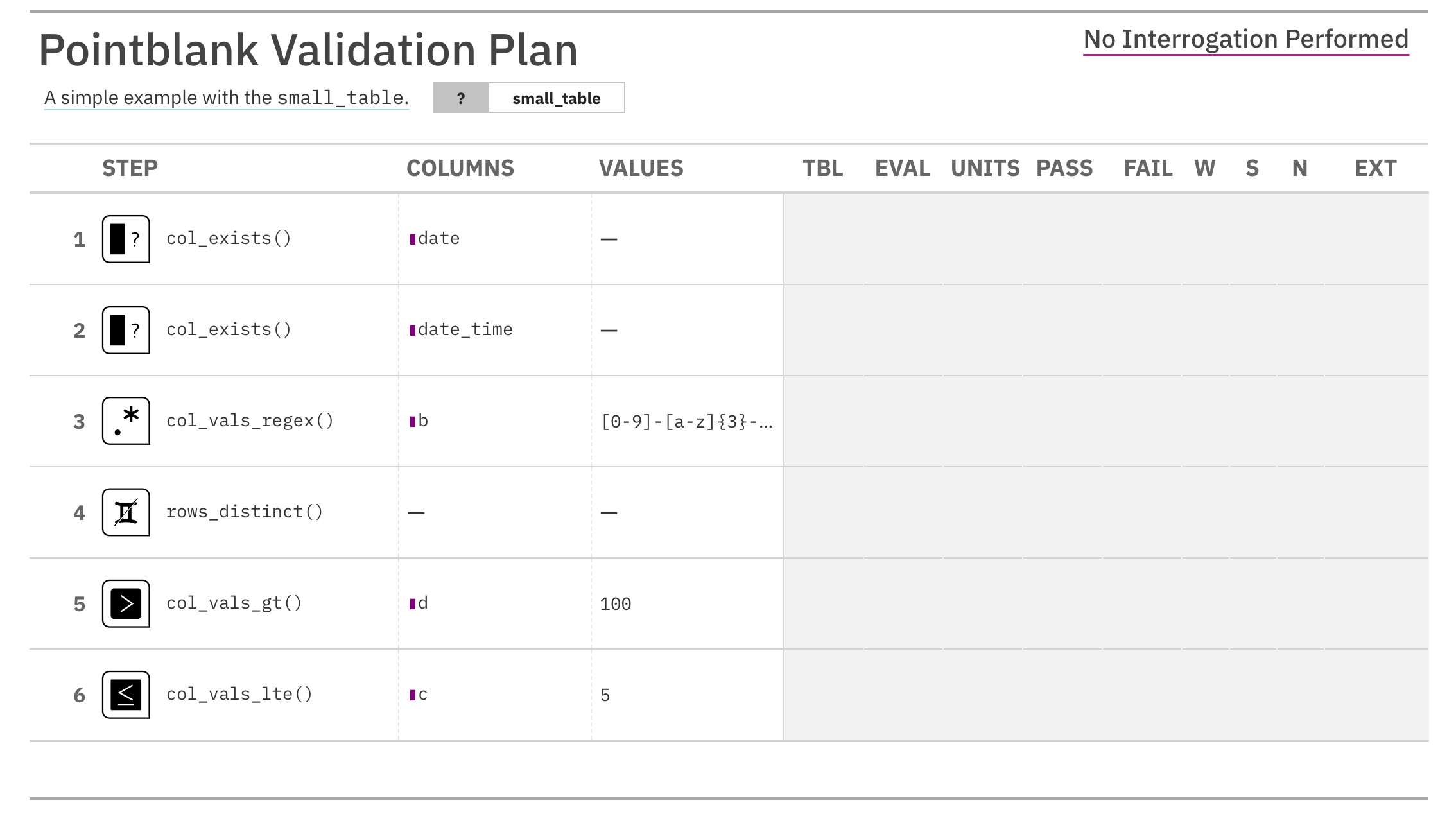

Printing the agent in the console shows the validation report in the

Viewer. Here is an excerpt of validation report, showing the single entry

that corresponds to the validation step demonstrated here.

agent)This way of using validation functions acts as a data filter. Data is

passed through but should stop() if there is a single test unit failing.

The behavior of side effects can be customized with the actions option.

tbl %>% col_exists(columns = a) #> # A tibble: 6 x 2 #> a b #> <dbl> <dbl> #> 1 5 7 #> 2 7 1 #> 3 6 0 #> 4 5 0 #> 5 8 0 #> 6 7 3

With the expect_*() form, we would typically perform one validation at a

time. This is primarily used in testthat tests.

expect_col_exists(tbl, columns = a)

With the test_*() form, we should get a single logical value returned to

us.

tbl %>% test_col_exists(columns = a) #> [1] TRUE

2-29

Other validation functions:

col_count_match(),

col_is_character(),

col_is_date(),

col_is_factor(),

col_is_integer(),

col_is_logical(),

col_is_numeric(),

col_is_posix(),

col_schema_match(),

col_vals_between(),

col_vals_decreasing(),

col_vals_equal(),

col_vals_expr(),

col_vals_gt(),

col_vals_gte(),

col_vals_in_set(),

col_vals_increasing(),

col_vals_lt(),

col_vals_lte(),

col_vals_make_set(),

col_vals_make_subset(),

col_vals_not_between(),

col_vals_not_equal(),

col_vals_not_in_set(),

col_vals_not_null(),

col_vals_null(),

col_vals_regex(),

col_vals_within_spec(),

conjointly(),

row_count_match(),

rows_complete(),

rows_distinct(),

serially(),

specially(),

tbl_match()

The col_is_character() validation function, the expect_col_is_character()

expectation function, and the test_col_is_character() test function all

check whether one or more columns in a table is of the character type. Like

many of the col_is_*()-type functions in pointblank, the only

requirement is a specification of the column names. The validation function

can be used directly on a data table or with an agent object (technically,

a ptblank_agent object) whereas the expectation and test functions can only

be used with a data table. Each validation step or expectation will operate

over a single test unit, which is whether the column is a character-type

column or not.

col_is_character( x, columns, actions = NULL, step_id = NULL, label = NULL, brief = NULL, active = TRUE ) expect_col_is_character(object, columns, threshold = 1) test_col_is_character(object, columns, threshold = 1)col_is_character( x, columns, actions = NULL, step_id = NULL, label = NULL, brief = NULL, active = TRUE ) expect_col_is_character(object, columns, threshold = 1) test_col_is_character(object, columns, threshold = 1)

x |

A pointblank agent or a data table

A data frame, tibble ( |

columns |

The target columns

A column-selecting expression, as one would use inside |

actions |

Thresholds and actions for different states

A list containing threshold levels so that the validation step can react

accordingly when exceeding the set levels for different states. This is to

be created with the |

step_id |

Manual setting of the step ID value

One or more optional identifiers for the single or multiple validation

steps generated from calling a validation function. The use of step IDs

serves to distinguish validation steps from each other and provide an

opportunity for supplying a more meaningful label compared to the step

index. By default this is |

label |

Optional label for the validation step

Optional label for the validation step. This label appears in the agent report and, for the best appearance, it should be kept quite short. See the Labels section for more information. |

brief |

Brief description for the validation step

A brief is a short, text-based description for the validation step. If

nothing is provided here then an autobrief is generated by the agent,

using the language provided in |

active |

Is the validation step active?

A logical value indicating whether the validation step should be active. If

the validation function is working with an agent, |

object |

A data table for expectations or tests

A data frame, tibble ( |

threshold |

The failure threshold

A simple failure threshold value for use with the expectation ( |

For the validation function, the return value is either a

ptblank_agent object or a table object (depending on whether an agent

object or a table was passed to x). The expectation function invisibly

returns its input but, in the context of testing data, the function is

called primarily for its potential side-effects (e.g., signaling failure).

The test function returns a logical value.

The types of data tables that are officially supported are:

data frames (data.frame) and tibbles (tbl_df)

Spark DataFrames (tbl_spark)

the following database tables (tbl_dbi):

PostgreSQL tables (using the RPostgres::Postgres() as driver)

MySQL tables (with RMySQL::MySQL())

Microsoft SQL Server tables (via odbc)

BigQuery tables (using bigrquery::bigquery())

DuckDB tables (through duckdb::duckdb())

SQLite (with RSQLite::SQLite())

Other database tables may work to varying degrees but they haven't been formally tested (so be mindful of this when using unsupported backends with pointblank).

columns may be a single column (as symbol a or string "a") or a vector

of columns (c(a, b, c) or c("a", "b", "c")). {tidyselect} helpers

are also supported, such as contains("date") and where(is.double). If

passing an external vector of columns, it should be wrapped in all_of().

When multiple columns are selected by columns, the result will be an

expansion of validation steps to that number of columns (e.g.,

c(col_a, col_b) will result in the entry of two validation steps).

Previously, columns could be specified in vars(). This continues to work,

but c() offers the same capability and supersedes vars() in columns.

Often, we will want to specify actions for the validation. This argument,

present in every validation function, takes a specially-crafted list object

that is best produced by the action_levels() function. Read that function's

documentation for the lowdown on how to create reactions to above-threshold

failure levels in validation. The basic gist is that you'll want at least a

single threshold level (specified as either the fraction of test units

failed, or, an absolute value), often using the warn_at argument. This is

especially true when x is a table object because, otherwise, nothing

happens. For the col_is_*()-type functions, using action_levels(warn_at = 1) or action_levels(stop_at = 1) are good choices depending on the

situation (the first produces a warning, the other will stop()).

label may be a single string or a character vector that matches the number

of expanded steps. label also supports {glue} syntax and exposes the

following dynamic variables contextualized to the current step:

"{.step}": The validation step name

"{.col}": The current column name

The glue context also supports ordinary expressions for further flexibility

(e.g., "{toupper(.step)}") as long as they return a length-1 string.

Want to describe this validation step in some detail? Keep in mind that this

is only useful if x is an agent. If that's the case, brief the agent

with some text that fits. Don't worry if you don't want to do it. The

autobrief protocol is kicked in when brief = NULL and a simple brief will

then be automatically generated.

A pointblank agent can be written to YAML with yaml_write() and the

resulting YAML can be used to regenerate an agent (with yaml_read_agent())

or interrogate the target table (via yaml_agent_interrogate()). When

col_is_character() is represented in YAML (under the top-level steps key

as a list member), the syntax closely follows the signature of the validation

function. Here is an example of how a complex call of col_is_character() as

a validation step is expressed in R code and in the corresponding YAML

representation.

R statement:

agent %>%

col_is_character(

columns = a,

actions = action_levels(warn_at = 0.1, stop_at = 0.2),

label = "The `col_is_character()` step.",

active = FALSE

)

YAML representation:

steps:

- col_is_character:

columns: c(a)

actions:

warn_fraction: 0.1

stop_fraction: 0.2

label: The `col_is_character()` step.

active: false

In practice, both of these will often be shorter as only the columns

argument requires a value. Arguments with default values won't be written to

YAML when using yaml_write() (though it is acceptable to include them with

their default when generating the YAML by other means). It is also possible

to preview the transformation of an agent to YAML without any writing to disk

by using the yaml_agent_string() function.

For all examples here, we'll use a simple table with a numeric column (a)

and a character column (b).

tbl <-

dplyr::tibble(

a = c(5, 7, 6, 5, 8, 7),

b = LETTERS[1:6]

)

tbl

#> # A tibble: 6 x 2

#> a b

#> <dbl> <chr>

#> 1 5 A

#> 2 7 B

#> 3 6 C

#> 4 5 D

#> 5 8 E

#> 6 7 F

We'll use this table with the different function variants.

agent with validation functions and then interrogate()

Validate that column b has the character class.

agent <- create_agent(tbl = tbl) %>% col_is_character(columns = b) %>% interrogate()

Printing the agent in the console shows the validation report in the

Viewer. Here is an excerpt of validation report, showing the single entry

that corresponds to the validation step demonstrated here.

agent)This way of using validation functions acts as a data filter. Data is passed

through but should stop() if there is a single test unit failing. The

behavior of side effects can be customized with the actions option.

tbl %>% col_is_character(columns = b) %>% dplyr::slice(1:5) #> # A tibble: 5 x 2 #> a b #> <dbl> <chr> #> 1 5 A #> 2 7 B #> 3 6 C #> 4 5 D #> 5 8 E

With the expect_*() form, we would typically perform one validation at a

time. This is primarily used in testthat tests.

expect_col_is_character(tbl, columns = b)

With the test_*() form, we should get a single logical value returned to

us.

tbl %>% test_col_is_character(columns = b) #> [1] TRUE

2-22

Other validation functions:

col_count_match(),

col_exists(),

col_is_date(),

col_is_factor(),

col_is_integer(),

col_is_logical(),

col_is_numeric(),

col_is_posix(),

col_schema_match(),

col_vals_between(),

col_vals_decreasing(),

col_vals_equal(),

col_vals_expr(),

col_vals_gt(),

col_vals_gte(),

col_vals_in_set(),

col_vals_increasing(),

col_vals_lt(),

col_vals_lte(),

col_vals_make_set(),

col_vals_make_subset(),

col_vals_not_between(),

col_vals_not_equal(),

col_vals_not_in_set(),

col_vals_not_null(),

col_vals_null(),

col_vals_regex(),

col_vals_within_spec(),

conjointly(),

row_count_match(),

rows_complete(),

rows_distinct(),

serially(),

specially(),

tbl_match()

Date objects?The col_is_date() validation function, the expect_col_is_date()

expectation function, and the test_col_is_date() test function all check

whether one or more columns in a table is of the R Date type. Like many

of the col_is_*()-type functions in pointblank, the only requirement is

a specification of the column names. The validation function can be used

directly on a data table or with an agent object (technically, a

ptblank_agent object) whereas the expectation and test functions can only

be used with a data table. Each validation step or expectation will operate

over a single test unit, which is whether the column is a Date-type column

or not.

col_is_date( x, columns, actions = NULL, step_id = NULL, label = NULL, brief = NULL, active = TRUE ) expect_col_is_date(object, columns, threshold = 1) test_col_is_date(object, columns, threshold = 1)col_is_date( x, columns, actions = NULL, step_id = NULL, label = NULL, brief = NULL, active = TRUE ) expect_col_is_date(object, columns, threshold = 1) test_col_is_date(object, columns, threshold = 1)

x |

A pointblank agent or a data table

A data frame, tibble ( |

columns |

The target columns

A column-selecting expression, as one would use inside |

actions |

Thresholds and actions for different states

A list containing threshold levels so that the validation step can react

accordingly when exceeding the set levels for different states. This is to

be created with the |

step_id |

Manual setting of the step ID value

One or more optional identifiers for the single or multiple validation

steps generated from calling a validation function. The use of step IDs

serves to distinguish validation steps from each other and provide an

opportunity for supplying a more meaningful label compared to the step

index. By default this is |

label |

Optional label for the validation step

Optional label for the validation step. This label appears in the agent report and, for the best appearance, it should be kept quite short. See the Labels section for more information. |

brief |

Brief description for the validation step

A brief is a short, text-based description for the validation step. If

nothing is provided here then an autobrief is generated by the agent,

using the language provided in |

active |

Is the validation step active?

A logical value indicating whether the validation step should be active. If

the validation function is working with an agent, |

object |

A data table for expectations or tests

A data frame, tibble ( |

threshold |

The failure threshold

A simple failure threshold value for use with the expectation ( |

For the validation function, the return value is either a

ptblank_agent object or a table object (depending on whether an agent

object or a table was passed to x). The expectation function invisibly

returns its input but, in the context of testing data, the function is

called primarily for its potential side-effects (e.g., signaling failure).

The test function returns a logical value.

The types of data tables that are officially supported are:

data frames (data.frame) and tibbles (tbl_df)

Spark DataFrames (tbl_spark)

the following database tables (tbl_dbi):

PostgreSQL tables (using the RPostgres::Postgres() as driver)

MySQL tables (with RMySQL::MySQL())

Microsoft SQL Server tables (via odbc)

BigQuery tables (using bigrquery::bigquery())

DuckDB tables (through duckdb::duckdb())

SQLite (with RSQLite::SQLite())

Other database tables may work to varying degrees but they haven't been formally tested (so be mindful of this when using unsupported backends with pointblank).

columns may be a single column (as symbol a or string "a") or a vector

of columns (c(a, b, c) or c("a", "b", "c")). {tidyselect} helpers

are also supported, such as contains("date") and where(is.double). If

passing an external vector of columns, it should be wrapped in all_of().

When multiple columns are selected by columns, the result will be an

expansion of validation steps to that number of columns (e.g.,

c(col_a, col_b) will result in the entry of two validation steps).

Previously, columns could be specified in vars(). This continues to work,

but c() offers the same capability and supersedes vars() in columns.

Often, we will want to specify actions for the validation. This argument,

present in every validation function, takes a specially-crafted list object

that is best produced by the action_levels() function. Read that function's

documentation for the lowdown on how to create reactions to above-threshold

failure levels in validation. The basic gist is that you'll want at least a

single threshold level (specified as either the fraction of test units

failed, or, an absolute value), often using the warn_at argument. This is

especially true when x is a table object because, otherwise, nothing

happens. For the col_is_*()-type functions, using action_levels(warn_at = 1) or action_levels(stop_at = 1) are good choices depending on the

situation (the first produces a warning, the other will stop()).

label may be a single string or a character vector that matches the number

of expanded steps. label also supports {glue} syntax and exposes the

following dynamic variables contextualized to the current step:

"{.step}": The validation step name

"{.col}": The current column name

The glue context also supports ordinary expressions for further flexibility

(e.g., "{toupper(.step)}") as long as they return a length-1 string.

Want to describe this validation step in some detail? Keep in mind that this

is only useful if x is an agent. If that's the case, brief the agent

with some text that fits. Don't worry if you don't want to do it. The

autobrief protocol is kicked in when brief = NULL and a simple brief will

then be automatically generated.

A pointblank agent can be written to YAML with yaml_write() and the

resulting YAML can be used to regenerate an agent (with yaml_read_agent())

or interrogate the target table (via yaml_agent_interrogate()). When

col_is_date() is represented in YAML (under the top-level steps key as a

list member), the syntax closely follows the signature of the validation

function. Here is an example of how a complex call of col_is_date() as a

validation step is expressed in R code and in the corresponding YAML

representation.

R statement:

agent %>%

col_is_date(

columns = a,

actions = action_levels(warn_at = 0.1, stop_at = 0.2),

label = "The `col_is_date()` step.",

active = FALSE

)

YAML representation:

steps:

- col_is_date:

columns: c(a)

actions:

warn_fraction: 0.1

stop_fraction: 0.2

label: The `col_is_date()` step.

active: false

In practice, both of these will often be shorter as only the columns

argument requires a value. Arguments with default values won't be written to

YAML when using yaml_write() (though it is acceptable to include them with

their default when generating the YAML by other means). It is also possible

to preview the transformation of an agent to YAML without any writing to disk

by using the yaml_agent_string() function.

The small_table dataset in the package has a date column. The following

examples will validate that that column is of the Date class.

small_table #> # A tibble: 13 x 8 #> date_time date a b c d e f #> <dttm> <date> <int> <chr> <dbl> <dbl> <lgl> <chr> #> 1 2016-01-04 11:00:00 2016-01-04 2 1-bcd-345 3 3423. TRUE high #> 2 2016-01-04 00:32:00 2016-01-04 3 5-egh-163 8 10000. TRUE low #> 3 2016-01-05 13:32:00 2016-01-05 6 8-kdg-938 3 2343. TRUE high #> 4 2016-01-06 17:23:00 2016-01-06 2 5-jdo-903 NA 3892. FALSE mid #> 5 2016-01-09 12:36:00 2016-01-09 8 3-ldm-038 7 284. TRUE low #> 6 2016-01-11 06:15:00 2016-01-11 4 2-dhe-923 4 3291. TRUE mid #> 7 2016-01-15 18:46:00 2016-01-15 7 1-knw-093 3 843. TRUE high #> 8 2016-01-17 11:27:00 2016-01-17 4 5-boe-639 2 1036. FALSE low #> 9 2016-01-20 04:30:00 2016-01-20 3 5-bce-642 9 838. FALSE high #> 10 2016-01-20 04:30:00 2016-01-20 3 5-bce-642 9 838. FALSE high #> 11 2016-01-26 20:07:00 2016-01-26 4 2-dmx-010 7 834. TRUE low #> 12 2016-01-28 02:51:00 2016-01-28 2 7-dmx-010 8 108. FALSE low #> 13 2016-01-30 11:23:00 2016-01-30 1 3-dka-303 NA 2230. TRUE high

agent with validation functions and then interrogate()

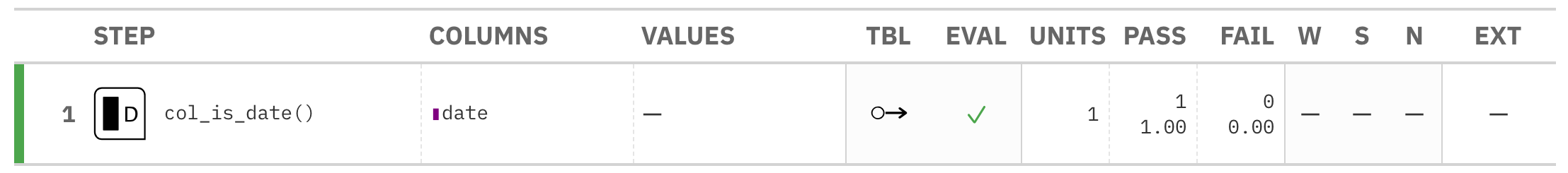

Validate that the column date has the Date class.

agent <- create_agent(tbl = small_table) %>% col_is_date(columns = date) %>% interrogate()

Printing the agent in the console shows the validation report in the

Viewer. Here is an excerpt of validation report, showing the single entry

that corresponds to the validation step demonstrated here.

agent)This way of using validation functions acts as a data filter. Data is passed

through but should stop() if there is a single test unit failing. The

behavior of side effects can be customized with the actions option.

small_table %>% col_is_date(columns = date) %>% dplyr::slice(1:5) #> # A tibble: 5 x 8 #> date_time date a b c d e f #> <dttm> <date> <int> <chr> <dbl> <dbl> <lgl> <chr> #> 1 2016-01-04 11:00:00 2016-01-04 2 1-bcd-345 3 3423. TRUE high #> 2 2016-01-04 00:32:00 2016-01-04 3 5-egh-163 8 10000. TRUE low #> 3 2016-01-05 13:32:00 2016-01-05 6 8-kdg-938 3 2343. TRUE high #> 4 2016-01-06 17:23:00 2016-01-06 2 5-jdo-903 NA 3892. FALSE mid #> 5 2016-01-09 12:36:00 2016-01-09 8 3-ldm-038 7 284. TRUE low

With the expect_*() form, we would typically perform one validation at a

time. This is primarily used in testthat tests.

expect_col_is_date(small_table, columns = date)

With the test_*() form, we should get a single logical value returned to

us.

small_table %>% test_col_is_date(columns = date) #> [1] TRUE

2-26

Other validation functions:

col_count_match(),

col_exists(),

col_is_character(),

col_is_factor(),

col_is_integer(),

col_is_logical(),

col_is_numeric(),

col_is_posix(),

col_schema_match(),

col_vals_between(),

col_vals_decreasing(),

col_vals_equal(),

col_vals_expr(),

col_vals_gt(),

col_vals_gte(),

col_vals_in_set(),

col_vals_increasing(),

col_vals_lt(),

col_vals_lte(),

col_vals_make_set(),

col_vals_make_subset(),

col_vals_not_between(),

col_vals_not_equal(),

col_vals_not_in_set(),

col_vals_not_null(),

col_vals_null(),

col_vals_regex(),

col_vals_within_spec(),

conjointly(),

row_count_match(),

rows_complete(),

rows_distinct(),

serially(),

specially(),

tbl_match()

factor objects?The col_is_factor() validation function, the expect_col_is_factor()

expectation function, and the test_col_is_factor() test function all check

whether one or more columns in a table is of the factor type. Like many of

the col_is_*()-type functions in pointblank, the only requirement is a

specification of the column names. The validation function can be used

directly on a data table or with an agent object (technically, a

ptblank_agent object) whereas the expectation and test functions can only

be used with a data table. Each validation step or expectation will operate

over a single test unit, which is whether the column is a factor-type column

or not.

col_is_factor( x, columns, actions = NULL, step_id = NULL, label = NULL, brief = NULL, active = TRUE ) expect_col_is_factor(object, columns, threshold = 1) test_col_is_factor(object, columns, threshold = 1)col_is_factor( x, columns, actions = NULL, step_id = NULL, label = NULL, brief = NULL, active = TRUE ) expect_col_is_factor(object, columns, threshold = 1) test_col_is_factor(object, columns, threshold = 1)

x |

A pointblank agent or a data table

A data frame, tibble ( |

columns |

The target columns

A column-selecting expression, as one would use inside |

actions |

Thresholds and actions for different states

A list containing threshold levels so that the validation step can react

accordingly when exceeding the set levels for different states. This is to

be created with the |

step_id |

Manual setting of the step ID value

One or more optional identifiers for the single or multiple validation

steps generated from calling a validation function. The use of step IDs

serves to distinguish validation steps from each other and provide an

opportunity for supplying a more meaningful label compared to the step

index. By default this is |

label |

Optional label for the validation step

Optional label for the validation step. This label appears in the agent report and, for the best appearance, it should be kept quite short. See the Labels section for more information. |

brief |

Brief description for the validation step

A brief is a short, text-based description for the validation step. If

nothing is provided here then an autobrief is generated by the agent,

using the language provided in |

active |

Is the validation step active?

A logical value indicating whether the validation step should be active. If

the validation function is working with an agent, |

object |

A data table for expectations or tests

A data frame, tibble ( |

threshold |

The failure threshold

A simple failure threshold value for use with the expectation ( |

For the validation function, the return value is either a

ptblank_agent object or a table object (depending on whether an agent

object or a table was passed to x). The expectation function invisibly

returns its input but, in the context of testing data, the function is

called primarily for its potential side-effects (e.g., signaling failure).

The test function returns a logical value.

The types of data tables that are officially supported are:

data frames (data.frame) and tibbles (tbl_df)

Spark DataFrames (tbl_spark)

the following database tables (tbl_dbi):

PostgreSQL tables (using the RPostgres::Postgres() as driver)

MySQL tables (with RMySQL::MySQL())

Microsoft SQL Server tables (via odbc)

BigQuery tables (using bigrquery::bigquery())

DuckDB tables (through duckdb::duckdb())

SQLite (with RSQLite::SQLite())

Other database tables may work to varying degrees but they haven't been formally tested (so be mindful of this when using unsupported backends with pointblank).

columns may be a single column (as symbol a or string "a") or a vector

of columns (c(a, b, c) or c("a", "b", "c")). {tidyselect} helpers

are also supported, such as contains("date") and where(is.double). If

passing an external vector of columns, it should be wrapped in all_of().

When multiple columns are selected by columns, the result will be an

expansion of validation steps to that number of columns (e.g.,

c(col_a, col_b) will result in the entry of two validation steps).

Previously, columns could be specified in vars(). This continues to work,

but c() offers the same capability and supersedes vars() in columns.

Often, we will want to specify actions for the validation. This argument,

present in every validation function, takes a specially-crafted list object

that is best produced by the action_levels() function. Read that function's

documentation for the lowdown on how to create reactions to above-threshold

failure levels in validation. The basic gist is that you'll want at least a

single threshold level (specified as either the fraction of test units

failed, or, an absolute value), often using the warn_at argument. This is

especially true when x is a table object because, otherwise, nothing

happens. For the col_is_*()-type functions, using action_levels(warn_at = 1) or action_levels(stop_at = 1) are good choices depending on the

situation (the first produces a warning, the other will stop()).

label may be a single string or a character vector that matches the number

of expanded steps. label also supports {glue} syntax and exposes the

following dynamic variables contextualized to the current step:

"{.step}": The validation step name

"{.col}": The current column name

The glue context also supports ordinary expressions for further flexibility

(e.g., "{toupper(.step)}") as long as they return a length-1 string.

Want to describe this validation step in some detail? Keep in mind that this

is only useful if x is an agent. If that's the case, brief the agent

with some text that fits. Don't worry if you don't want to do it. The

autobrief protocol is kicked in when brief = NULL and a simple brief will

then be automatically generated.

A pointblank agent can be written to YAML with yaml_write() and the

resulting YAML can be used to regenerate an agent (with yaml_read_agent())

or interrogate the target table (via yaml_agent_interrogate()). When

col_is_factor() is represented in YAML (under the top-level steps key as

a list member), the syntax closely follows the signature of the validation

function. Here is an example of how a complex call of col_is_factor() as a

validation step is expressed in R code and in the corresponding YAML

representation.

R statement:

agent %>%

col_is_factor(

columns = a,

actions = action_levels(warn_at = 0.1, stop_at = 0.2),

label = "The `col_is_factor()` step.",

active = FALSE

)

YAML representation:

steps:

- col_is_factor:

columns: c(a)

actions:

warn_fraction: 0.1

stop_fraction: 0.2

label: The `col_is_factor()` step.

active: false

In practice, both of these will often be shorter as only the columns

argument requires a value. Arguments with default values won't be written to

YAML when using yaml_write() (though it is acceptable to include them with

their default when generating the YAML by other means). It is also possible

to preview the transformation of an agent to YAML without any writing to disk

by using the yaml_agent_string() function.

Let's modify the f column in the small_table dataset so that the values

are factors instead of having the character class. The following examples

will validate that the f column was successfully mutated and now consists

of factors.

tbl <- small_table %>% dplyr::mutate(f = factor(f)) tbl #> # A tibble: 13 x 8 #> date_time date a b c d e f #> <dttm> <date> <int> <chr> <dbl> <dbl> <lgl> <fct> #> 1 2016-01-04 11:00:00 2016-01-04 2 1-bcd-345 3 3423. TRUE high #> 2 2016-01-04 00:32:00 2016-01-04 3 5-egh-163 8 10000. TRUE low #> 3 2016-01-05 13:32:00 2016-01-05 6 8-kdg-938 3 2343. TRUE high #> 4 2016-01-06 17:23:00 2016-01-06 2 5-jdo-903 NA 3892. FALSE mid #> 5 2016-01-09 12:36:00 2016-01-09 8 3-ldm-038 7 284. TRUE low #> 6 2016-01-11 06:15:00 2016-01-11 4 2-dhe-923 4 3291. TRUE mid #> 7 2016-01-15 18:46:00 2016-01-15 7 1-knw-093 3 843. TRUE high #> 8 2016-01-17 11:27:00 2016-01-17 4 5-boe-639 2 1036. FALSE low #> 9 2016-01-20 04:30:00 2016-01-20 3 5-bce-642 9 838. FALSE high #> 10 2016-01-20 04:30:00 2016-01-20 3 5-bce-642 9 838. FALSE high #> 11 2016-01-26 20:07:00 2016-01-26 4 2-dmx-010 7 834. TRUE low #> 12 2016-01-28 02:51:00 2016-01-28 2 7-dmx-010 8 108. FALSE low #> 13 2016-01-30 11:23:00 2016-01-30 1 3-dka-303 NA 2230. TRUE high

agent with validation functions and then interrogate()

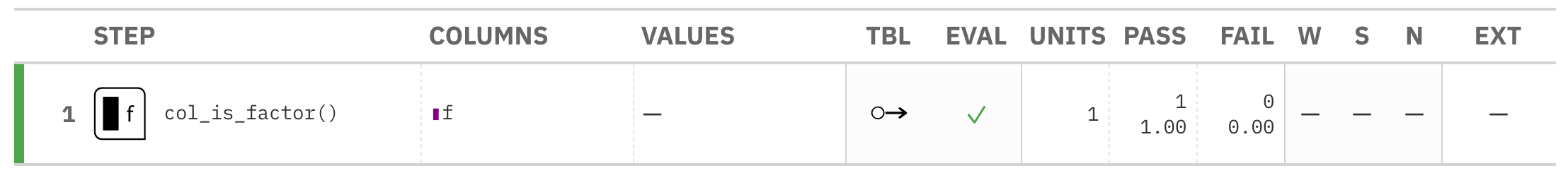

Validate that the column f in the tbl object is of the factor class.

agent <- create_agent(tbl = tbl) %>% col_is_factor(columns = f) %>% interrogate()

Printing the agent in the console shows the validation report in the

Viewer. Here is an excerpt of validation report, showing the single entry

that corresponds to the validation step demonstrated here.

agent)This way of using validation functions acts as a data filter. Data is passed

through but should stop() if there is a single test unit failing. The

behavior of side effects can be customized with the actions option.

tbl %>% col_is_factor(columns = f) %>% dplyr::slice(1:5) #> # A tibble: 5 x 8 #> date_time date a b c d e f #> <dttm> <date> <int> <chr> <dbl> <dbl> <lgl> <fct> #> 1 2016-01-04 11:00:00 2016-01-04 2 1-bcd-345 3 3423. TRUE high #> 2 2016-01-04 00:32:00 2016-01-04 3 5-egh-163 8 10000. TRUE low #> 3 2016-01-05 13:32:00 2016-01-05 6 8-kdg-938 3 2343. TRUE high #> 4 2016-01-06 17:23:00 2016-01-06 2 5-jdo-903 NA 3892. FALSE mid #> 5 2016-01-09 12:36:00 2016-01-09 8 3-ldm-038 7 284. TRUE low

With the expect_*() form, we would typically perform one validation at a

time. This is primarily used in testthat tests.

expect_col_is_factor(tbl, f)

With the test_*() form, we should get a single logical value returned to

us.

tbl %>% test_col_is_factor(columns = f) #> [1] TRUE

2-28

Other validation functions:

col_count_match(),

col_exists(),

col_is_character(),

col_is_date(),

col_is_integer(),

col_is_logical(),

col_is_numeric(),

col_is_posix(),

col_schema_match(),

col_vals_between(),

col_vals_decreasing(),

col_vals_equal(),

col_vals_expr(),

col_vals_gt(),

col_vals_gte(),

col_vals_in_set(),

col_vals_increasing(),

col_vals_lt(),

col_vals_lte(),

col_vals_make_set(),

col_vals_make_subset(),

col_vals_not_between(),

col_vals_not_equal(),

col_vals_not_in_set(),

col_vals_not_null(),

col_vals_null(),

col_vals_regex(),

col_vals_within_spec(),

conjointly(),

row_count_match(),

rows_complete(),

rows_distinct(),

serially(),

specially(),

tbl_match()

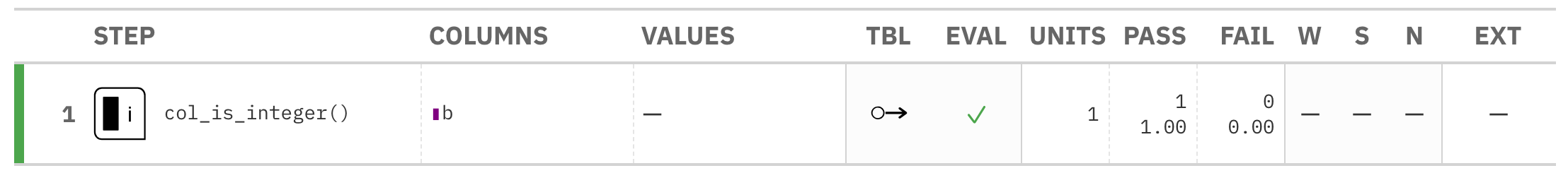

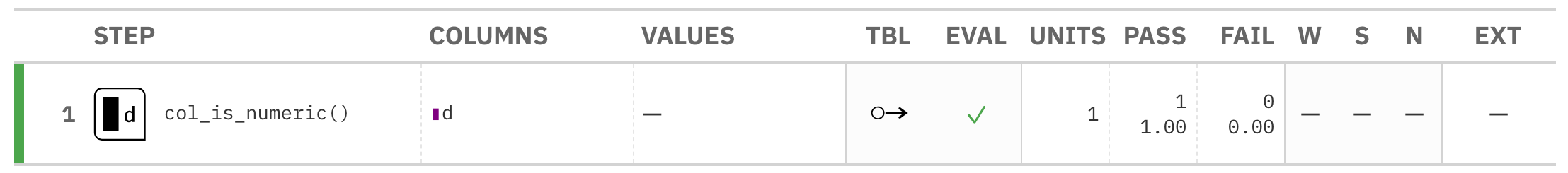

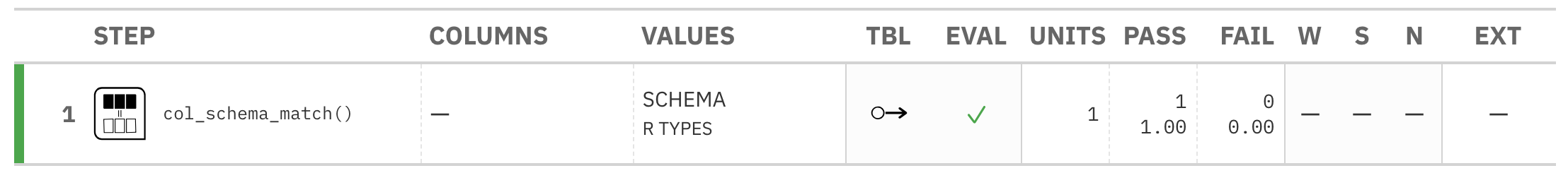

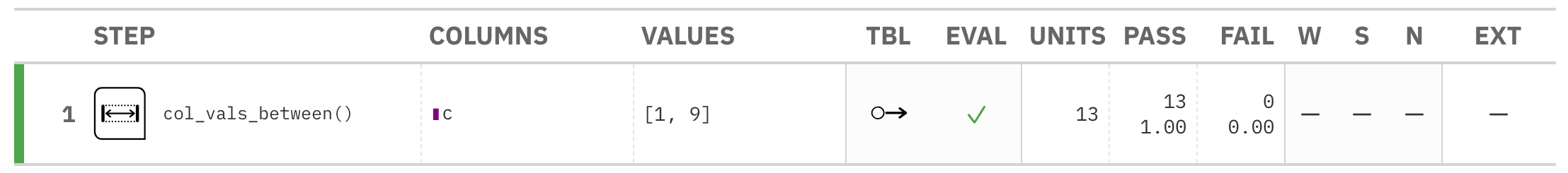

The col_is_integer() validation function, the expect_col_is_integer()

expectation function, and the test_col_is_integer() test function all check

whether one or more columns in a table is of the integer type. Like many of

the col_is_*()-type functions in pointblank, the only requirement is a

specification of the column names. The validation function can be used

directly on a data table or with an agent object (technically, a